Infinity with Max Cooper — In Six Minutes

Only I discern—

Infinite passion, and the pain

Of finite hearts that yearn.

—Robert Browning


The video was created with a custom-made kinetic typography system that emulates a low-fi terminal display. The system is controlled by a plain-text configuration file that defines scenes and timings; there is no GUI. There is also no post-processing of any kind, such as After Effects. Everything that you see in the final video was generated programmatically. The code was written over a period of about a month.

This page describes this system's design in detail. Fair warning: it gets into the weeds quite a bit.

The original music score for Aleph 2 is 6 minutes and 34 seconds in length.

The score tempo is 118 bpm (1.967 beats per second, 0.082 beats per frame). There are 194.1 measures in the video (29.5 measures per minute, 0.492 measures per second, 0.020 measures per frame).

The video format is 24 fps, so the track comprises 9,473 frames (1440 frames per minute, 48.814 frames per measure, 12.203 frames per beat, 3.051 frames per 16th note).
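These figures follow from the tempo and frame rate alone. The arithmetic below assumes 4/4 time, with 4 beats per measure and 4 sixteenth notes per beat, which is what the ratios above imply:

$$
\begin{aligned}
\text{frames per beat} &= \frac{24 \times 60}{118} \approx 12.203 \\
\text{frames per measure} &= 4 \times 12.203 \approx 48.814 \\
\text{frames per 16th note} &= \frac{12.203}{4} \approx 3.051 \\
\text{measures} &= \frac{9473}{48.814} \approx 194.1
\end{aligned}
$$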

The typography uses the Classic Console font, expanded by me to include set theory characters such as `\aleph`, `\mathbb{N}`, `\mathbb{R}`, `\notin`, `\varnothing` and so on.

The video is 16:9 and each frame is rendered at 1,920 × 1,080. Text is set on a grid of 192 columns and 83 rows (maximum of 15,936 characters per frame).

The entire video is first initialized as an `(x,y,z)` matrix of size 192 × 83 × 9,473 (150,961,728 elements). The `z` dimension is time, and each slice along it is one frame. For example, `(x,y,1)` is the first frame.
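The sketch below shows one way to represent such a matrix in Python. It is not the original Perl code. Only the dimensions and the 1-indexed time axis come from the text. The `Cell` and `VideoMatrix` names, the sparse dictionary storage (only non-blank elements are kept) and the 1-indexing of `x` and `y` are assumptions made for illustration.

```python
from dataclasses import dataclass, field

# Dimensions from the text: 192 columns x 83 rows x 9,473 frames.
COLS, ROWS, FRAMES = 192, 83, 9473


@dataclass
class Cell:
    char: str
    rgb: str = "white"  # hypothetical palette name


@dataclass
class VideoMatrix:
    """Hypothetical (x, y, z) character matrix; z is time, all axes 1-indexed."""
    cols: int = COLS
    rows: int = ROWS
    frames: int = FRAMES
    # Sparse storage (an assumption): (x, y, z) -> Cell; a missing key is blank.
    cells: dict = field(default_factory=dict)

    def _check(self, x, y, z):
        if not (1 <= x <= self.cols and 1 <= y <= self.rows and 1 <= z <= self.frames):
            raise IndexError((x, y, z))

    def put(self, x, y, z, char, rgb="white"):
        """Assign a colored character to one element."""
        self._check(x, y, z)
        self.cells[(x, y, z)] = Cell(char, rgb)

    def get(self, x, y, z):
        """Return the Cell at (x, y, z), or None if that element is blank."""
        self._check(x, y, z)
        return self.cells.get((x, y, z))

    @staticmethod
    def png_name(z):
        # Slice z = 1 .. 9473 maps to 000000.png .. 009472.png.
        return f"{z - 1:06d}.png"


m = VideoMatrix()
m.put(96, 42, 1, "9", rgb="red")   # a red 9 mid-screen in the first frame
print(m.cols * m.rows * m.frames)  # 150961728
```

A dense array would match the description more literally. The sparse form just avoids allocating every element of a mostly blank video up front.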

The video is then built up from a series of scenes. This process is single-threaded and takes about 30 minutes, during which the matrix is populated with text characters. As the scenes are built up, each element in the matrix either stays blank or is assigned a colored character. Effects, such as random glitches, are periodically layered on top. All elements are synchronized to the tempo of the score, and transitions can be triggered from MIDI files of the drum score. For example, in parts of the video, the background of each frame flashes to the kick and snare. I describe this in detail below.

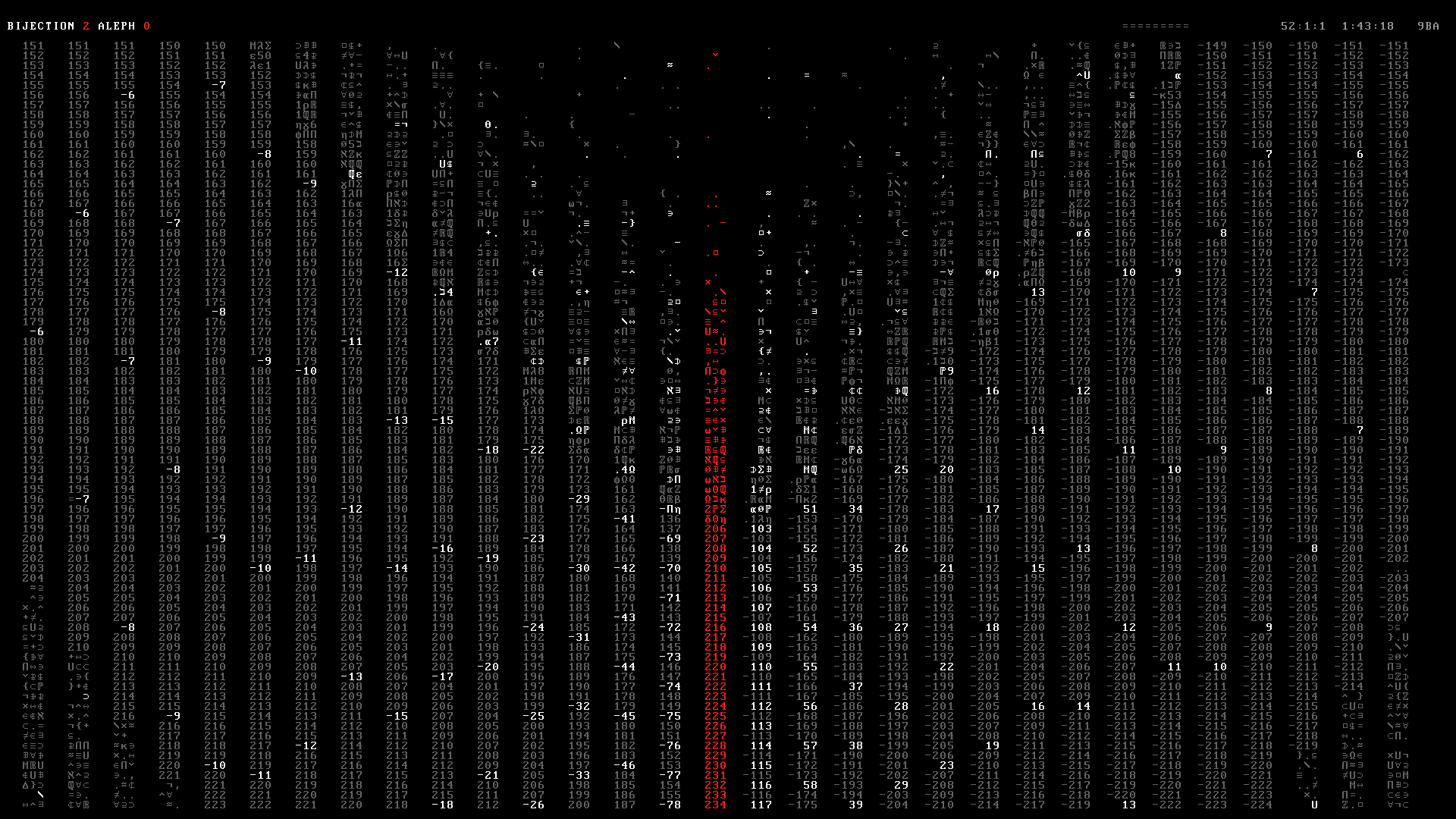

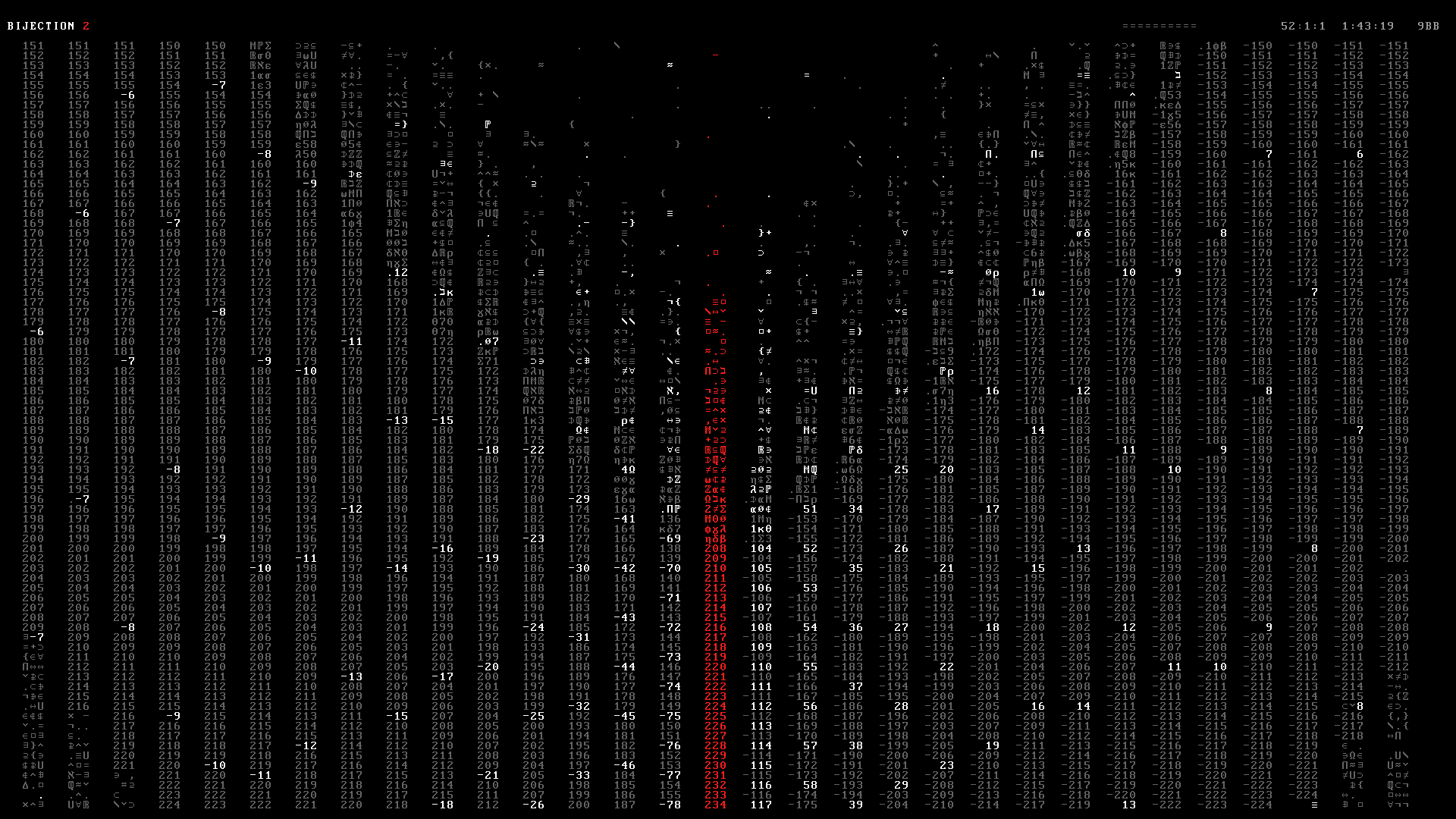

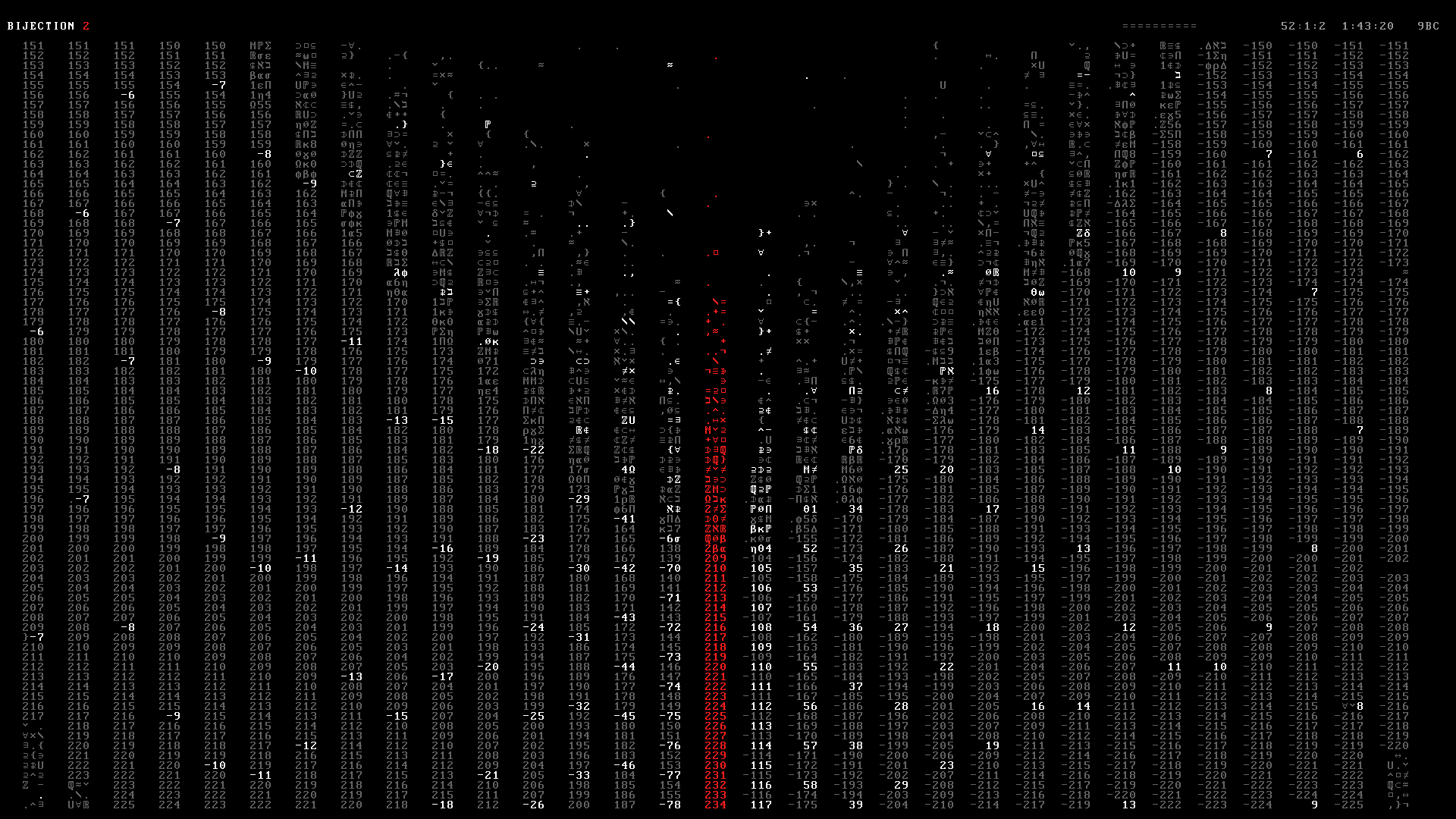

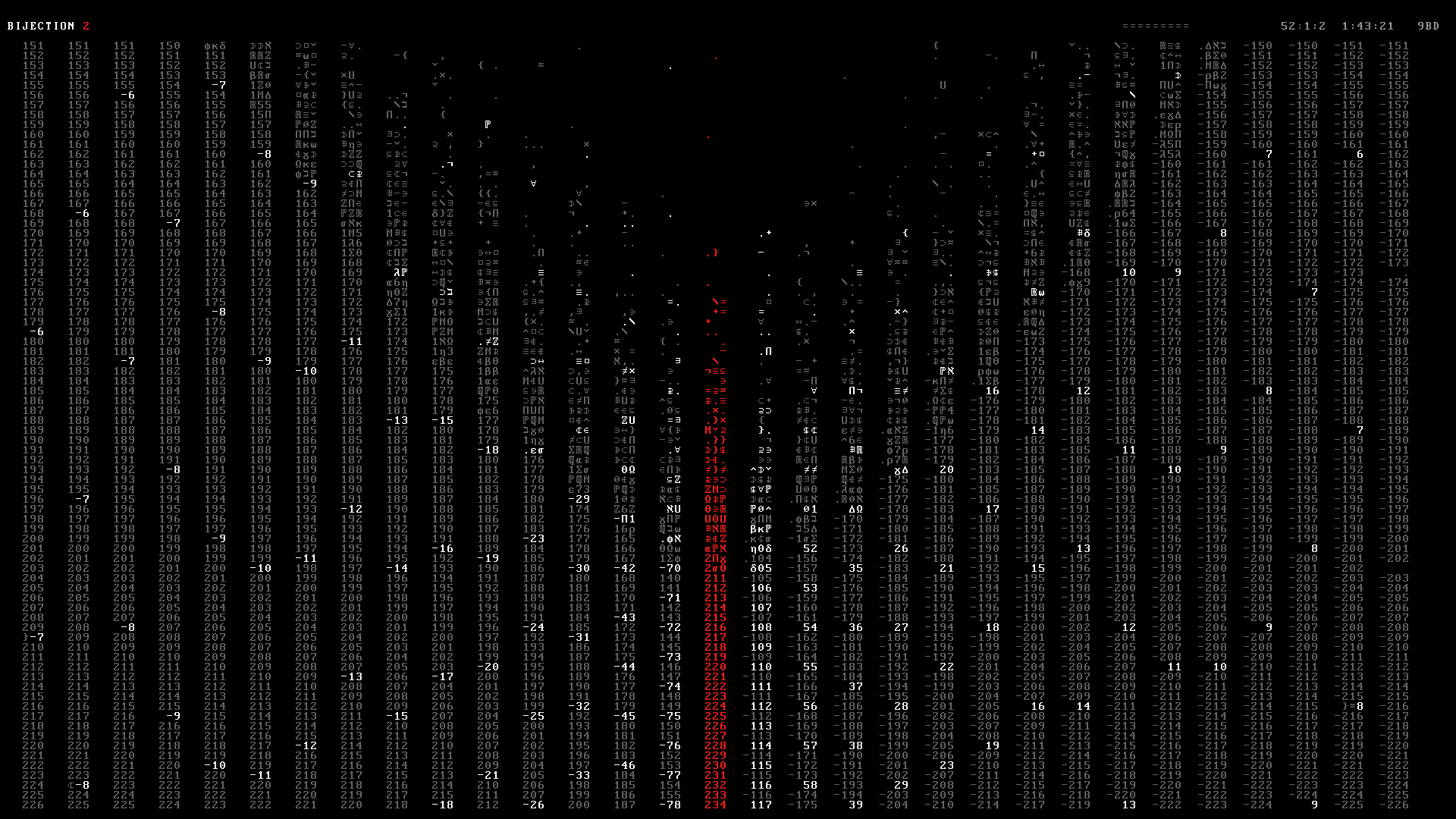

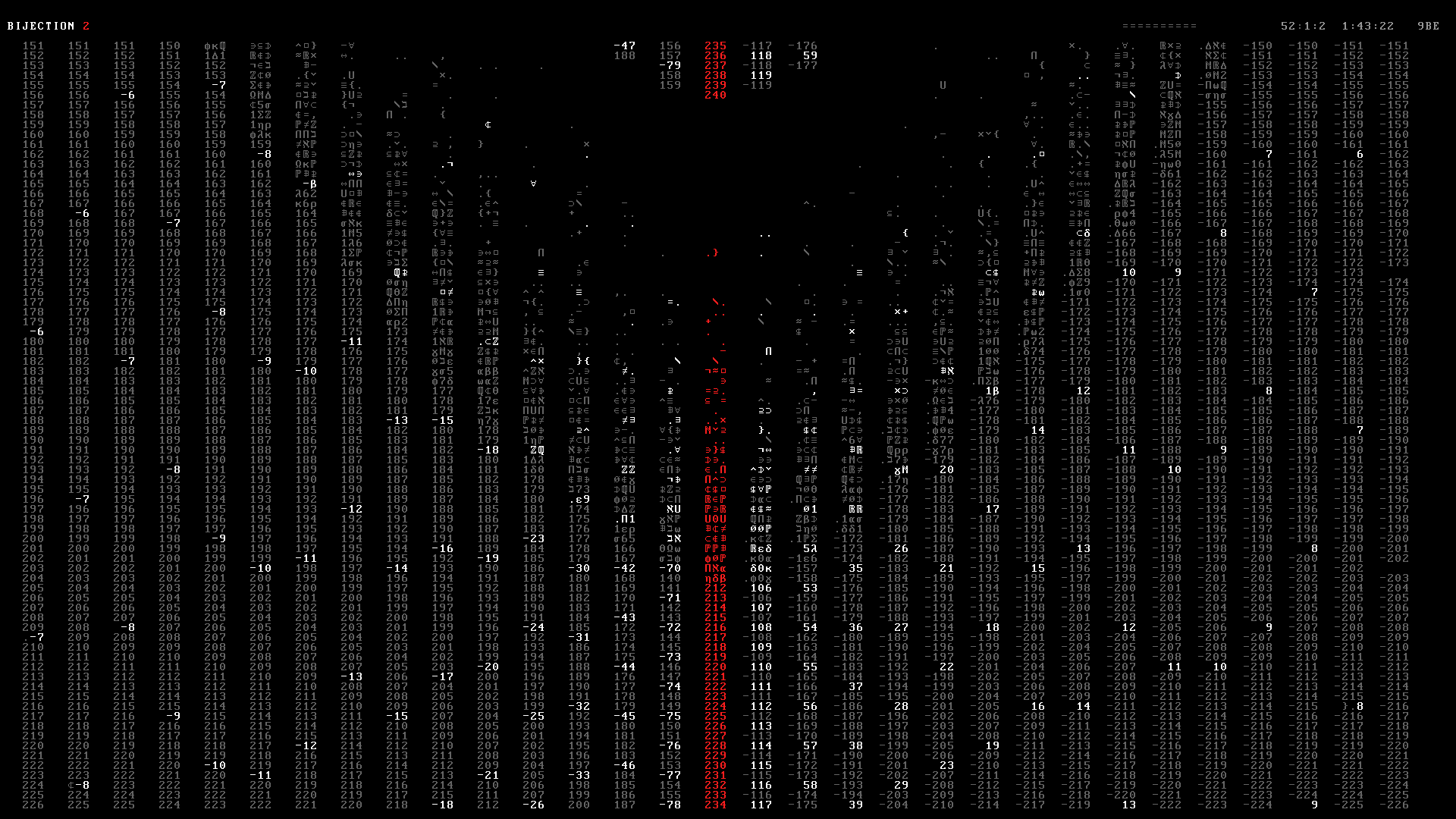

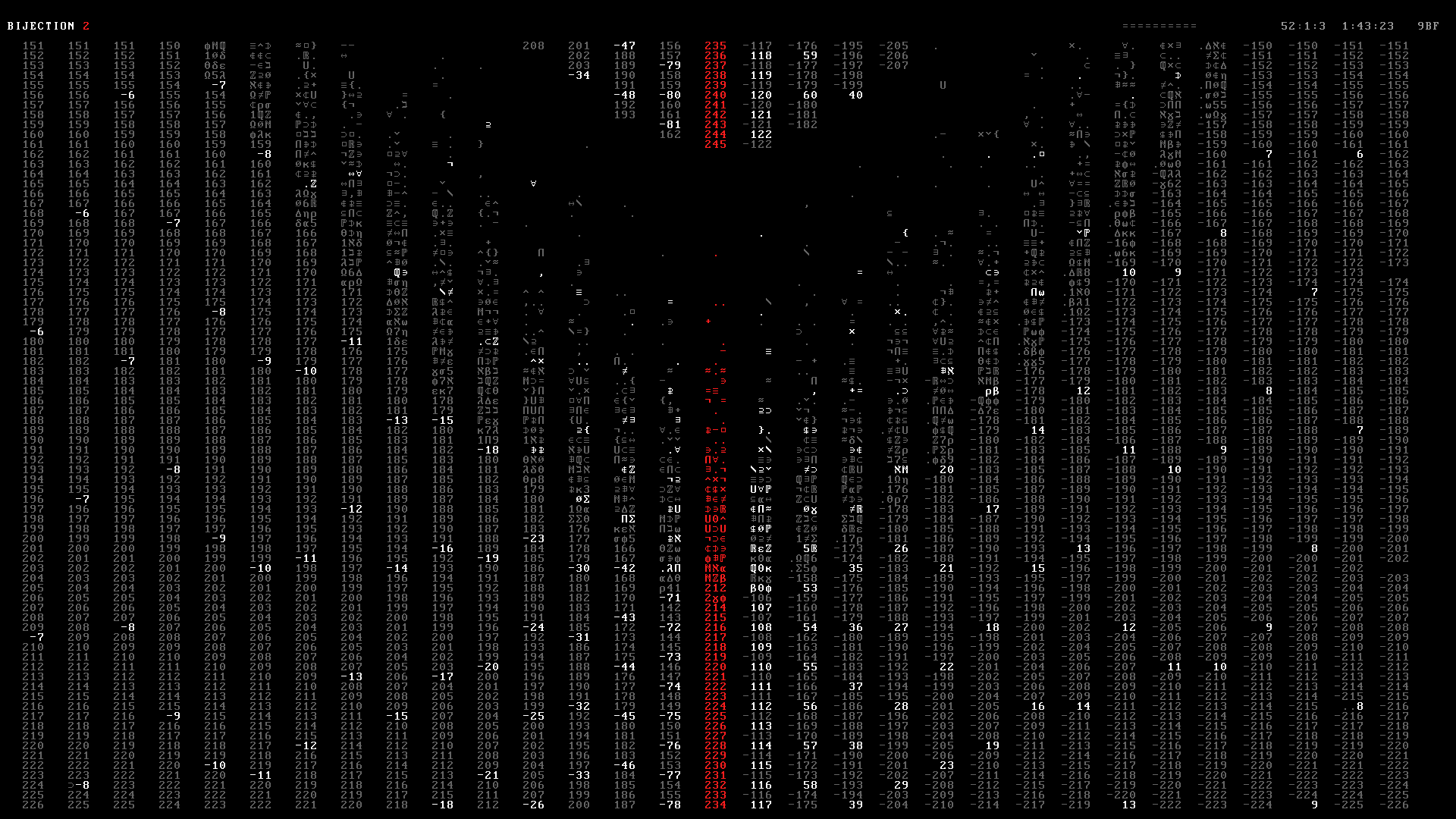

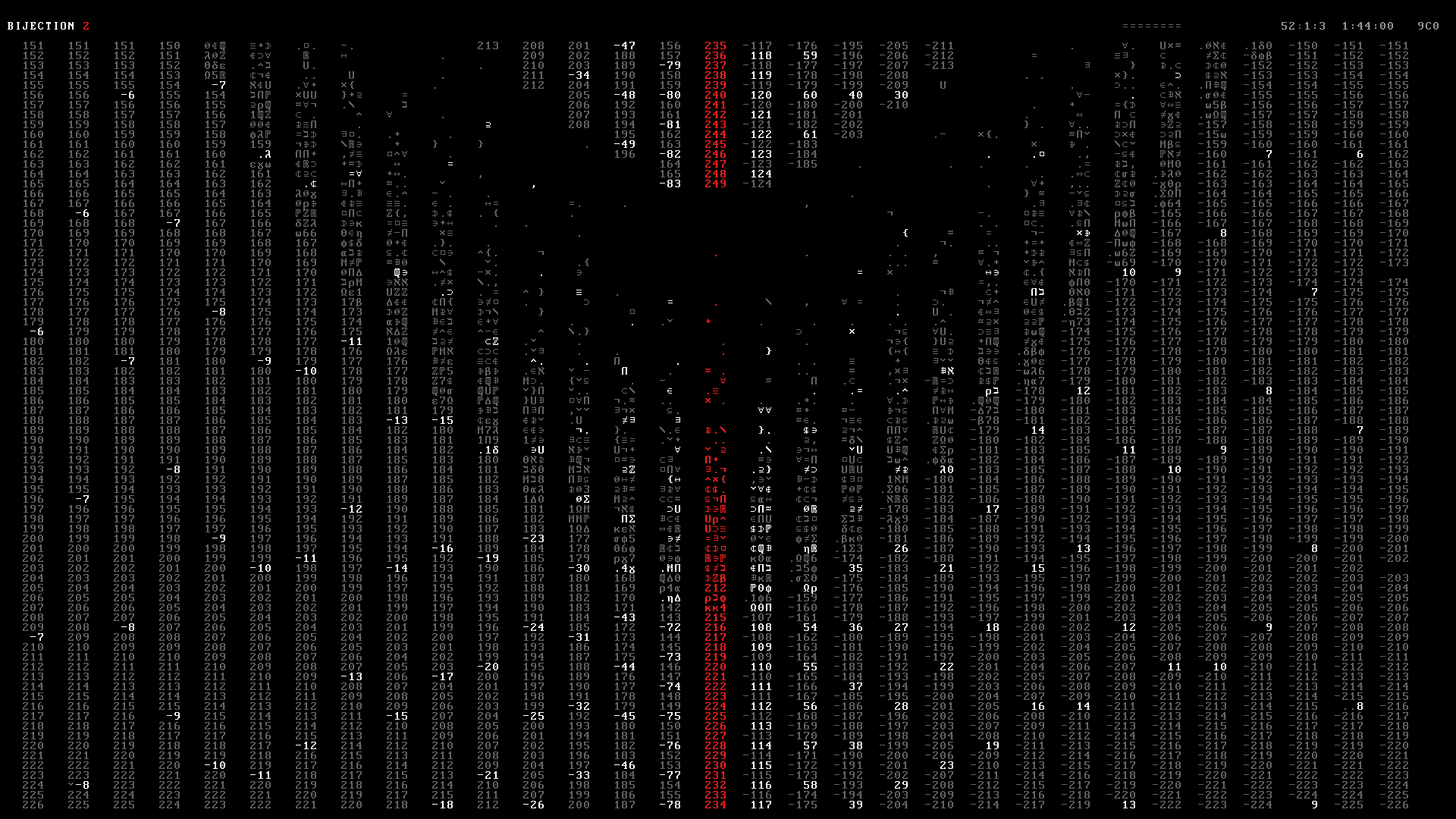

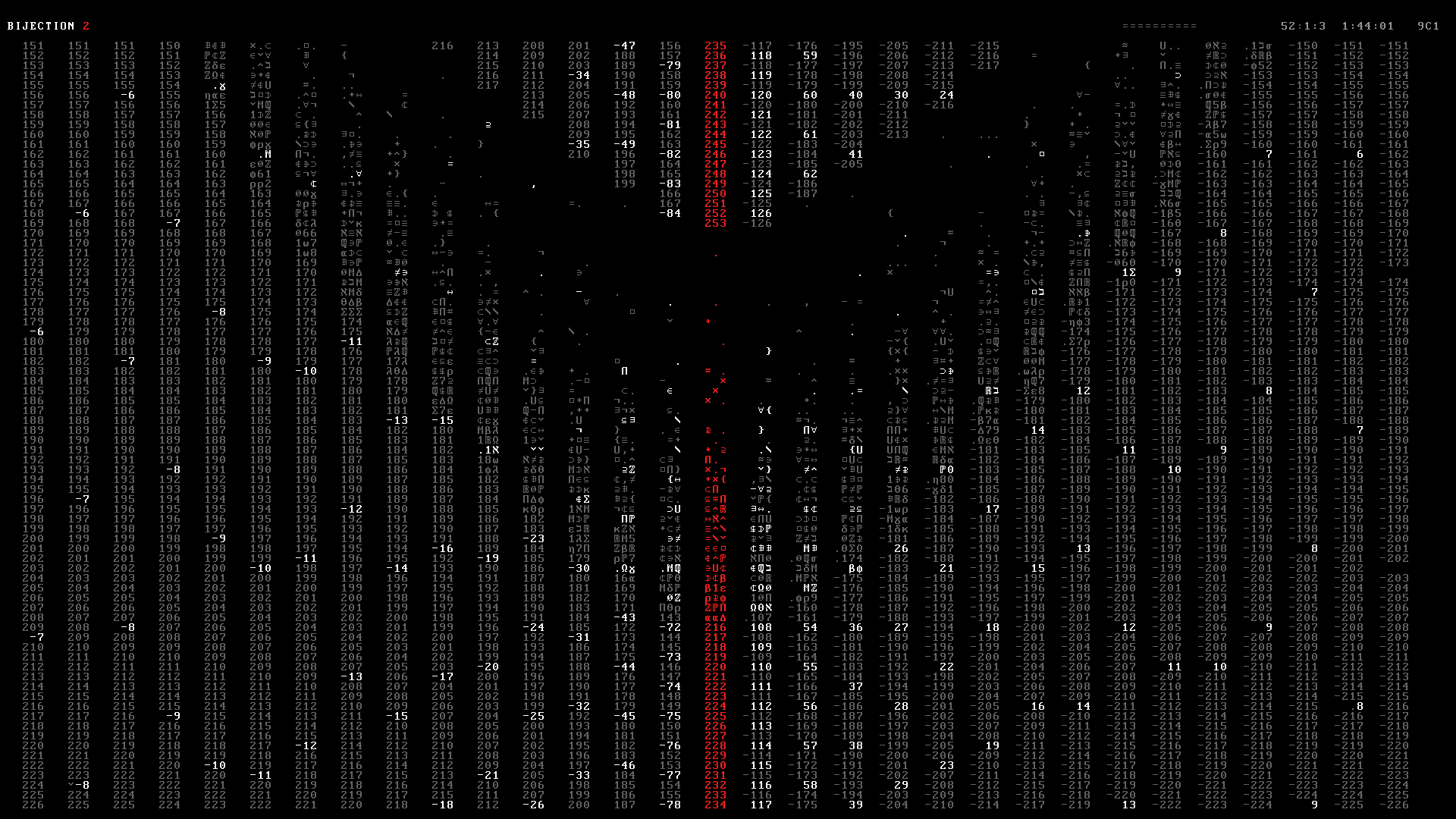

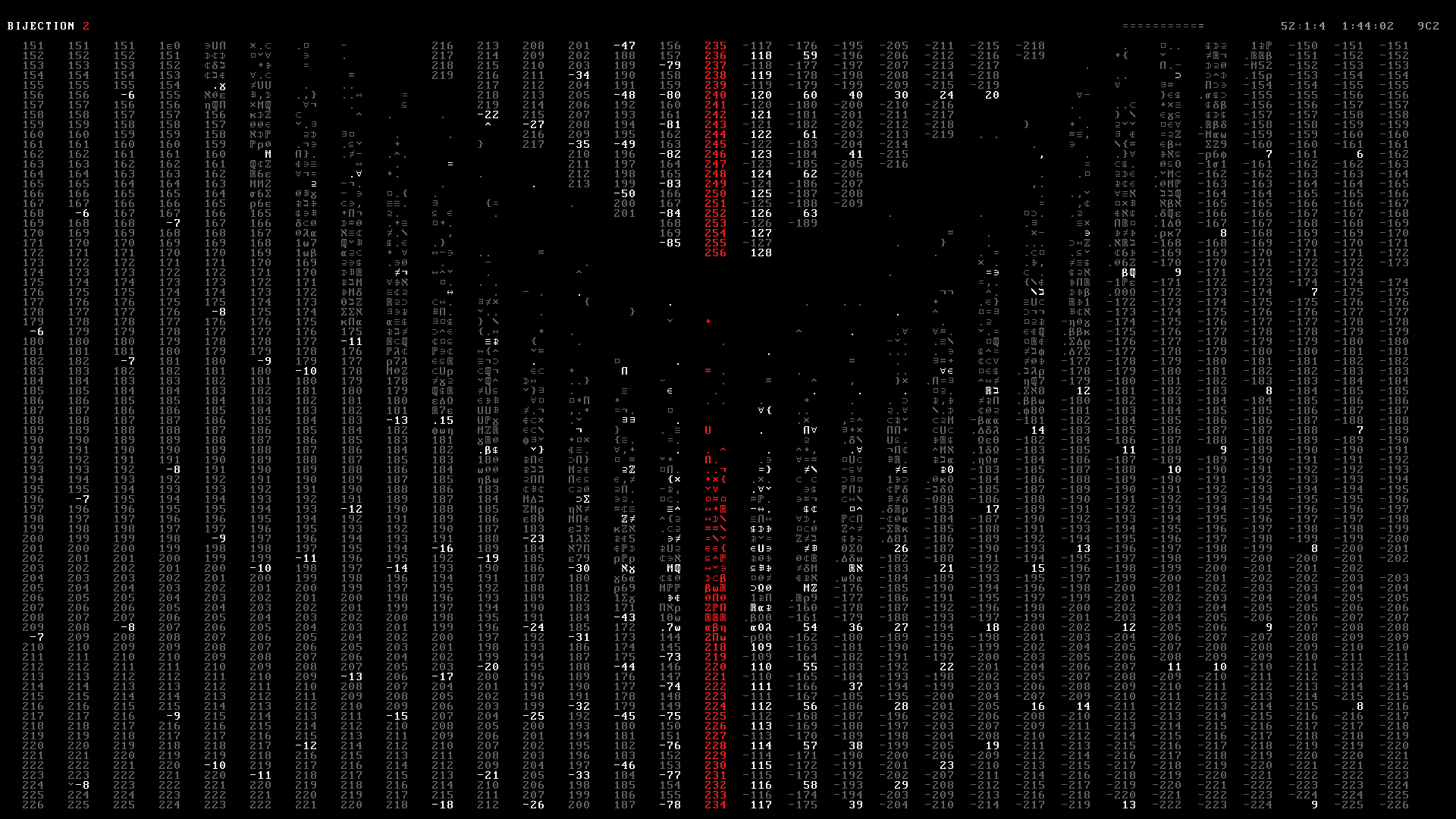

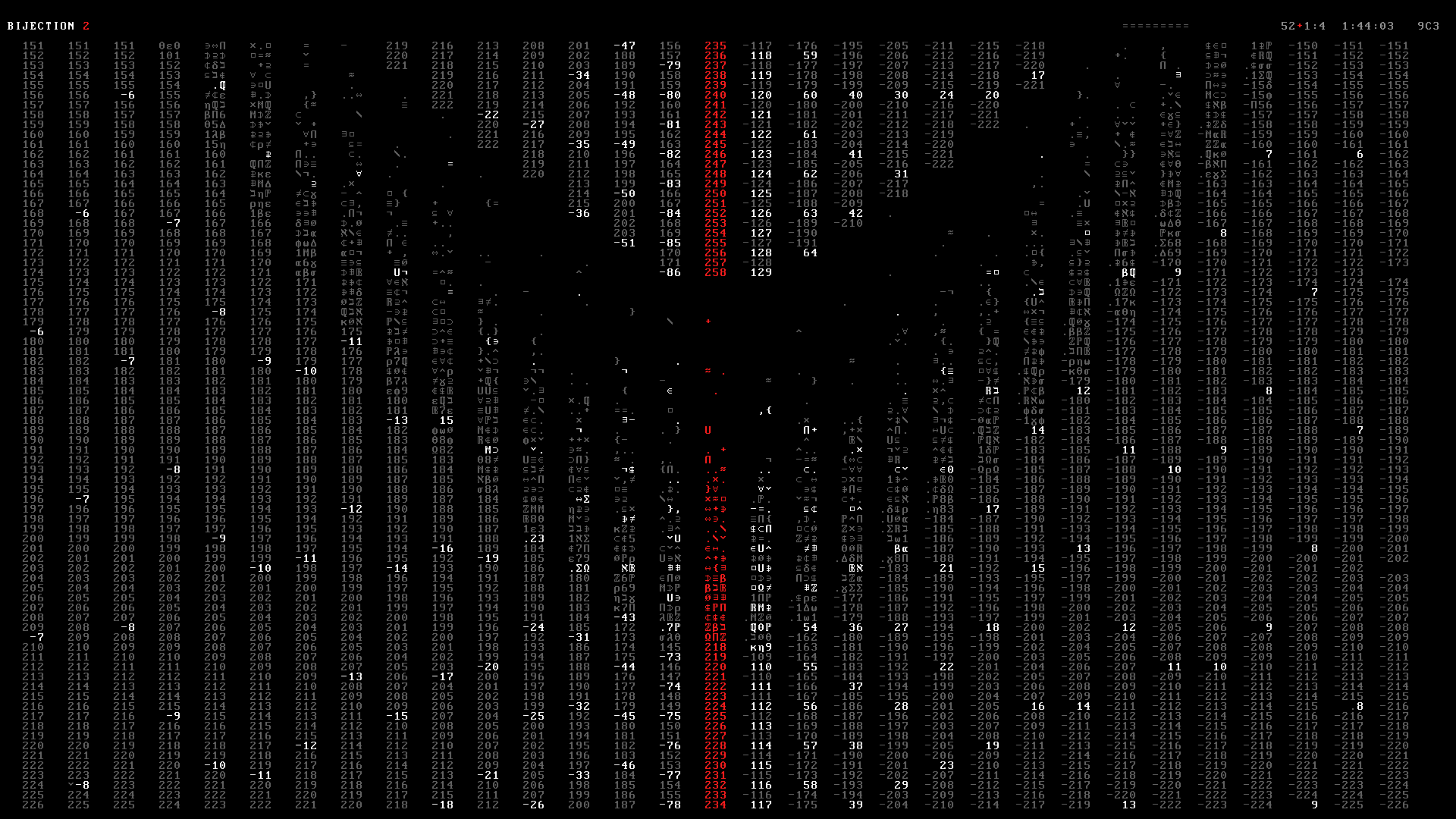

Once the matrix has been fully populated, each frame is output to a PNG file (e.g. 000000.png ... 009472.png). Above are 10 frames, 2490–2499, from the Bijection 2 section of the video at 1:42.

The frames and the audio track were then combined with ffmpeg into a QuickTime movie (PNG codec, 24-bit RGB color space).

```shell
ffmpeg -thread_queue_size 32 -r 24 -y -i "frames/aleph/%06d.png" \
  $offset $seek -i wav/aleph.wav \
  -pix_fmt rgb24 -avoid_negative_ts make_zero -shortest \
  -r 24 -c:v png -f mov aleph.mov
```

The .mov file is then converted into DXV format used by the Resolume VJ software that Max Cooper uses in his shows to control the displays.

If you watch the video on YouTube, please know that YouTube's temporal and chroma compression greatly reduces the quality compared to the original 24-bit RGB master, which was used for the Barbican Hall performance. The compression bleaches out the vibrant red, which really pops in the master, and blurs frames during fast strobing. This neuters parts of the video that are designed to be overwhelming, such as the drop transition to 24 fps strobing during the natural number power set.

Here is Max's original direction for the video's narrative and art style.

I want to show ever growing lists of numbers, then split the lists somehow (left/right of screen) and show how you can pair subsets of Aleph 0 / integers with themselves, then show how Cantor's diagonal argument can be used to pair the fractions with the natural numbers, then show his other diagonal argument for proving the reals are greater than Aleph 0, then show the process (very roughly and with maximum artistic licence if necessary) of taking the power set of infinite sets to create larger infinities to get up to Aleph 1, Aleph 2, and maybe further if the system allows. In the end it should just be complete text/number chaos on screen along with the intense chaos of the audio.

We'd have to be very careful to avoid any Matrix reference visually! ... but that low-fi / command line style would be suitable I think.

In terms of animation, the technical requirements were relatively simple. Everything would be rendered with a fixed-width font with no anti-aliasing and the color palette would be very limited (e.g. black, grey, white and a red for emphasis). Nothing other than characters would be drawn (no lines, circles or other geometrical shapes) and there would be no gradients. Basically, very lo-fi and 8-bit.

Despite the fact that I had never made any kind of animation before, I thought that these requirements would be relatively easy to meet. After all, if worse came to worst, I told myself that I could always generate the video frame by frame.

However, it quickly became obvious that traditional keyframe systems could not easily be used to tell our story. Tools like Adobe After Effects rely on interpolation between keyframes, but in our video every frame is essentially a keyframe. This meant that any kind of interpolation between scene points would have to be programmed. While After Effects makes it easy to move things around on the screen, it requires scripting to generate content based on lists of numbers, set elements, and so on. And since I had no experience with After Effects, I thought it would be faster to code my own system than to learn After Effects' expression language, only to (possibly) discover later that what I wanted to do was either hard or practically impossible for me to achieve within our time frame (a month).

I knew that I was reasonably good at prototyping and generating custom visualizations, so it felt safer to create something from scratch.

The final version of the system, which is very much a prototype, is about 6,000 lines of Perl. The Aleph 2 video is built from about 2,000 lines of plain-text configuration that defines scenes, timings and effects.

It took about a week to figure out how to design the system. As we were building out the video, I iterated between creating the story and creating the system to tell it. It felt very much like trying to build and fly a plane at the same time.

At times, the entire process would crash down on me because some tiny tweak fundamentally changed how everything worked.

For example, one extremely nagging aspect of the code that I patched only half-way through the entire process had to do with how time was specified. From the start, I made use of measure:beat:16note notation, such as for scene starts and ends (e.g. 2:2 to 4:1 meant a scene started on measure 2 beat 2 and stopped at measure 4 beat 1).

This notation used 1-indexing (e.g. 1:1:1 is the first 16th note of the score). 0-indexing would have been unintuitive because musically one counts beats as 1, 2, 3, 4 and not 0, 1, 2, 3. Importantly, I wanted the way we referred to timings in conversation (e.g. on the "and of 2" of the 4th measure) to be directly reflected in the code.

All this made sense until I needed a notation to express duration. When I started using 1:1 to indicate a duration of 1 measure and 1 beat, I had to reconcile the difference between 1:1 as a point in time and 1:1 as a duration. The former specified the beginning of an interval (frame 0) and the latter its end (frame 61). It also took me forever to decide whether a duration of 5 beats should be expressed as 1:2 (i.e. the end is the start of beat 2) or 1:1 (i.e. the end is the end of beat 1).

The fact that the video frame rate is 24 fps made things even more complicated. At this frame rate, there are 3.051 frames per 16th note. This meant that a duration of 1 frame (which happens during fast strobing) couldn't be expressed in the integer measure:beat:16note notation. I didn't want to have to write 0:0:0.3278, which seemed a tedious way of saying "1 frame". Furthermore, because frames don't neatly line up with 16th note boundaries, quite a lot of time was spent checking that timing definitions didn't suffer from rounding issues.
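One way to keep points and durations apart is to give each its own conversion function. The Python sketch below is not the original Perl and makes several assumptions. It assumes 4/4 time. Points are 1-indexed positions, so 1:1 is frame 0. Durations are 0-based counts, so a 1:1 duration is 1 measure plus 1 beat. Exact fractions are used so that rounding happens only once, at the final conversion to whole frames. The function names and the floor-versus-round choices are illustrative assumptions.

```python
import math
from fractions import Fraction

BPM, FPS = 118, 24
BEATS_PER_MEASURE = 4    # assumes 4/4 time
SIXTEENTHS_PER_BEAT = 4
# Exact frames per 16th note: 24 * 60 / 118 / 4 = 3.0508...
FRAMES_PER_16TH = Fraction(FPS * 60, BPM * SIXTEENTHS_PER_BEAT)


def point_to_frame(text):
    """Point in time, 1-indexed 'measure:beat:16th' -> 0-indexed frame.

    Omitted fields default to the first beat / 16th, so '2:2' is the start
    of beat 2 of measure 2. Rounds down to the frame the point falls in.
    """
    m, b, s = ([int(p) for p in text.split(":")] + [1, 1])[:3]
    sixteenths = ((m - 1) * BEATS_PER_MEASURE + (b - 1)) * SIXTEENTHS_PER_BEAT + (s - 1)
    return math.floor(sixteenths * FRAMES_PER_16TH)


def duration_to_frames(text):
    """Duration as 0-based 'measures:beats:16ths' counts -> whole frames (rounded)."""
    m, b, s = ([int(p) for p in text.split(":")] + [0, 0])[:3]
    sixteenths = (m * BEATS_PER_MEASURE + b) * SIXTEENTHS_PER_BEAT + s
    return round(sixteenths * FRAMES_PER_16TH)


print(point_to_frame("1:1"), duration_to_frames("1:1"))  # 0 61
print(duration_to_frames("0:0:1"))                      # 3
```

With these conventions, a 1:1 point maps to frame 0 and a 1:1 duration to frame 61, as in the text. The shortest expressible duration, 0:0:1, already rounds to 3 frames. That is why single-frame strobing needs a unit other than the 16th note.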

In the final video, you'll see the measure timer in the upper right corner. The : between the measure and beat flashes as a red + on the beat (118 bpm). Next to the measure timestamp you'll see a min:sec:frame timestamp and hexadecimal readout of the frame number.

The video is composed of a series of scenes, each with a specific start and end time, as well as other parameters. The start and end of scenes were typically informed by changes in the music, such as breaks.

A scene is a short clip of the video in which similar content is shown. For example, at the start, where we count the natural numbers, each screen that fills with numbers is its own scene. Below are the timing definitions for the first three screens of natural number counts.

```
<scene>
name = nc1
timing = count1
ease = 0.6
ease_step = 0
start = 4m  # starts at measure 4
len = 13m   # lasts for 13 measures
k_start = 1 # start counting at 1
</scene>

<scene>
name = nc2
timing = nc_timing
ease = 0.8
start = 17m # starts at measure 17
len = 6m    # lasts for 6 measures
</scene>

<scene>
name = nc3
timing = nc_timing
ease = 0.7
start = 23m
len = 2m
</scene>
```

For each scene, I generate the numbers that will appear in the scene and then distribute their time of appearance across the duration of the scene, subject to some easing.
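The text does not spell out what the `ease` parameter does, so the Python sketch below is only a guess at one plausible scheme. In it, item i of n appears at fraction (i/n)^ease of the scene. With `ease` below 1, the gaps between items shrink as the scene progresses, so the count starts slowly and accelerates. With `ease = 1`, items are spaced evenly. The function name and this power-law form are assumptions, not the original code.

```python
def appearance_frames(n_items, start_frame, len_frames, ease=1.0):
    """Frame at which each of n_items appears within [start, start + len).

    Hypothetical easing: item i appears at fraction (i / n) ** ease of the
    scene. ease < 1 makes the gaps shrink over time (slow start, then faster);
    ease = 1 spaces the items evenly.
    """
    if n_items <= 0:
        return []
    return [start_frame + int(len_frames * (i / n_items) ** ease)
            for i in range(n_items)]


# Scene nc1 starts at measure 4 (3 x 48.814 = frame 146) and lasts 13 measures
# (about 634 frames); 40 is an arbitrary item count for illustration.
frames = appearance_frames(40, 146, 634, ease=0.6)
```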

Some scenes are more complicated and consist of several screens' worth of content. Once the screen is filled, it's cleared and more content is shown. For example, in the bijection section, the natural numbers down the center take 2 measures to fill the first time. The next fill is faster and takes 2 × 0.8 = 1.6 measures. We keep multiplying the fill time by 0.8 until it reaches a minimum of 6 frames.

```
<timing bijection-n>
time_to_fill_start = 2m
time_to_fill_mult = 0.8
time_to_fill_end = 6
time_pause_start = 2
time_blank_start = 2
</timing>
```
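The shrinking fill time can be sketched as a geometric sequence clamped at a floor. This Python sketch makes one assumption: an `m` suffix means measures and a bare number means frames. That matches the text's reading of `time_to_fill_end = 6` as 6 frames. The function names are hypothetical. The sketch does not model `time_pause_start` or `time_blank_start`, because their exact semantics aren't described.

```python
FRAMES_PER_MEASURE = 24 * 60 * 4 / 118  # 48.814 frames at 24 fps, 118 bpm, 4/4


def to_frames(value):
    """'2m' -> frames in 2 measures; a bare number is taken as frames (assumed)."""
    value = str(value).strip()
    if value.endswith("m"):
        return float(value[:-1]) * FRAMES_PER_MEASURE
    return float(value)


def fill_times(start, mult, end, screens):
    """Time to fill each successive screen, in frames.

    Starts at `start`, is multiplied by `mult` after every screen and is
    never allowed to drop below `end`.
    """
    t, floor = to_frames(start), to_frames(end)
    times = []
    for _ in range(screens):
        times.append(max(t, floor))
        t *= mult
    return times


# Values from the <timing bijection-n> block above.
for i, t in enumerate(fill_times("2m", 0.8, "6", 15), start=1):
    print(f"screen {i:2d}: {t:6.2f} frames")
```

With these values, the fill time drops from about 97.6 frames (2 measures) to about 6.7 frames on the 13th screen. From the 14th screen on, it is held at 6 frames.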

A lot of the effects appear as set theory characters that display on the screen briefly in sync with the music.

Throughout the video, there are many transitions and fades in which the text appears to decay.

For example, a 9 might decay into an 8, then a 7, and so on down to 0 before being wiped from the screen. At other times, a 9 might decay by randomly selecting the next character from a series of lists, such as:

```
["@","#","\$","%","&","*"]
["[","]","{","}","<",">"]
[";",":","-","+","="]
[".",","]
```

So a 9 might decay as 9%]+ across some number of frames, depending on whether the decay is fast or slow.
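Both kinds of decay can be sketched as short per-position trails of characters. In this Python sketch, the random decay picks one character from each list in turn. That is consistent with the 9%]+ example but is an assumption about the original code. The trailing blank (the wipe) and the `stretch` helper for slow versus fast decay are also illustrative.

```python
import random

# The four lists from the text, in order. (\$ in the Perl lists is a literal $.)
DECAY_SETS = [
    ["@", "#", "$", "%", "&", "*"],
    ["[", "]", "{", "}", "<", ">"],
    [";", ":", "-", "+", "="],
    [".", ","],
]
BLANK = " "


def countdown_decay(digit):
    """'9' -> 9, 8, ..., 0, then blank (wiped from the screen)."""
    return [str(d) for d in range(int(digit), -1, -1)] + [BLANK]


def random_decay(char, rng, sets=DECAY_SETS):
    """Start at char, take one random pick from each list in turn, then blank."""
    return [char] + [rng.choice(s) for s in sets] + [BLANK]


def stretch(trail, frames_per_step):
    """Hold each step for frames_per_step frames: larger values = slower decay."""
    return [c for c in trail for _ in range(frames_per_step)]


print(stretch(countdown_decay("3"), 2))
# ['3', '3', '2', '2', '1', '1', '0', '0', ' ', ' ']
print("".join(random_decay("9", random.Random(7))))  # a 6-step trail starting with 9
```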

The timing and speed of this decay process took a long time to tweak. In many parts of the video, the decay speeds up or slows down, and often it is triggered by percussion. For example, the snare can trigger some characters on the screen to decay rapidly.

The decay is processed as an effect after the basic scene is laid out. During this process, we can look ahead in time to know what is coming at a given screen position and terminate the decay if, for example, a new character appears. Alternatively, the decay can bleed into the next scene, if the rate at which it happens is slow and crosses scene boundaries.
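The look-ahead can be sketched per screen position. In this Python sketch (not the original code), the scene layout for one (x, y) position is a list indexed by frame. It is paired with the frames at which the scene places a new character at that position. The decay trail is written from its start frame and cut off just before the next such arrival. Scene boundaries play no role, so a slow decay with no upcoming arrival bleeds into the next scene, as described above. The function name is hypothetical.

```python
def apply_decay(column, arrivals, start, trail):
    """Write a decay trail into one screen position, with look-ahead.

    column   -- characters for one (x, y) position, indexed by frame (None = blank)
    arrivals -- frames at which the laid-out scene places a new character here
    start    -- frame at which the decay begins
    trail    -- characters to show on successive frames
    Returns the number of frames written.
    """
    # Look ahead: the decay must end before the next character arrives.
    upcoming = [a for a in arrivals if a > start]
    stop = min(upcoming, default=len(column))
    end = min(start + len(trail), stop, len(column))
    for z in range(start, end):
        column[z] = trail[z - start]
    return max(end - start, 0)


# A '9' is shown on frames 0-4 and a new '1' arrives at frame 8.
col = ["9"] * 5 + [None] * 3 + ["1"] * 7
n = apply_decay(col, arrivals=[0, 8], start=3, trail=list("9%]+. "))
print(n)  # 5: the trail's final blank is cut off by the arrival at frame 8
```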

Percussion, particularly the snare, is used to trigger characters to flash and decay.

For example, between measures 52 and 65, we flash characters from the set `braces` (`{` and `}`). The braces appear only where characters are already on the screen (`writeonchar = 1`), with a probability of `flash_rate = 0.1` at any given character position.

Immediately upon being drawn, these braces decay (see below) into the list of characters defined by `decay_set = setrapid`.

```
<scene>
name = snare
action = midi_trigger
position = full_screen
midi_sets = snare
midi_action = cascade_red_braces_subtle
start = 52m
end = 65m
</scene>

<midi_action cascade_red_braces_subtle>
action = char_flash(flash_char_set=>"braces",writeonchar=>1,
                    rgb=>"red",flash_rate=>0.1,
                    decay_rate=>1,decay_delay=>0,
                    decay_punch=>0,decay_set=>"setrapid")
</midi_action>
```
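The `char_flash` action can be read as an independent coin flip at each eligible screen position. The Python sketch below mirrors a few of its parameters (`flash_char_set`, `writeonchar`, `rgb`, `flash_rate`), but their semantics here are only a guess. The `CHAR_SETS` table and the dictionary representation of the screen are hypothetical. The decay parameters (`decay_rate`, `decay_delay`, `decay_punch`, `decay_set`) are not modelled.

```python
import random

# Hypothetical lookup from set name to characters.
CHAR_SETS = {"braces": ["{", "}"]}


def char_flash(screen, rng, flash_char_set="braces", writeonchar=True,
               rgb="red", flash_rate=0.1, cols=192, rows=83):
    """Return {(x, y): (char, rgb)} flashes for one snare-triggered frame.

    screen -- {(x, y): char} for characters currently drawn
    With writeonchar, only occupied positions are eligible; otherwise every
    grid cell is. Each eligible position flashes with probability flash_rate.
    """
    chars = CHAR_SETS[flash_char_set]
    if writeonchar:
        eligible = sorted(screen)
    else:
        eligible = [(x, y) for x in range(1, cols + 1) for y in range(1, rows + 1)]
    return {pos: (rng.choice(chars), rgb)
            for pos in eligible if rng.random() < flash_rate}


screen = {(x, 10): "1" for x in range(1, 193)}  # one full row of text
flashes = char_flash(screen, random.Random(52))
```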

Because the drum part of the score was a live studio recording, I transcribed the percussion into a MIDI file by marking each hit on the waveform. This transcription took a while. Care had to be taken to identify the instruments correctly (e.g. snare vs. rim shot) so that the list of events matched the volume of the recording.

Once I had a list of all the percussion events, I could create effects that coincided with them. For example, each snare hit could trigger an effect 50% of the time.
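Mapping the transcribed percussion onto the video is a unit conversion from MIDI ticks to frames at the constant 118 bpm, followed by a random draw for each hit. This Python sketch makes several assumptions. The events are taken to be already read from the MIDI file as (tick, note) pairs at 480 ticks per quarter note. The snare is taken to use General MIDI note 38. The function names are hypothetical.

```python
import random

BPM, FPS = 118, 24
SNARE = 38  # General MIDI acoustic snare (assumed mapping)


def tick_to_frame(tick, ppq=480, bpm=BPM, fps=FPS):
    """Convert a MIDI tick to a 0-indexed video frame at a constant tempo."""
    beats = tick / ppq
    seconds = beats * 60 / bpm
    return int(seconds * fps)  # floor: the hit shows in the frame it falls in


def triggered_frames(events, note, rng, probability=0.5, ppq=480):
    """Frames at which an effect fires for hits on `note`.

    events -- iterable of (tick, note) pairs
    Each matching hit triggers independently with `probability`; hits that
    land in the same frame collapse into a single trigger.
    """
    return sorted({tick_to_frame(tick, ppq) for tick, n in events
                   if n == note and rng.random() < probability})


# A snare on every beat of the first 8 measures, each firing 50% of the time.
events = [(beat * 480, SNARE) for beat in range(32)]
frames = triggered_frames(events, SNARE, random.Random(118))
```

Using a set means that two hits landing in the same frame produce a single trigger.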

By far, most of the time spent making this video went into tweaking the density, duration and decay of effects. Towards the end, between measures 160 and 167, when power sets of the naturals and reals are being shown, many effects are stacked. For example, the kick drum is used to decay and fade the natural number power sets on the left of the screen, while the snare does the same for the real number power sets on the right.

Modern animation systems provide an interface that helps in defining timings and effect curves. Because my system is controlled by plain-text input of the kind you see above, each set of effects was lovingly tuned by hand, by adjusting measure starts, ends and rates in the configuration file.

These configuration files got to be quite long. For example, here are the scene definitions (storyboard.v3.conf), the effect scenes (effects.conf) and the MIDI and decay timings (aleph.conf).

I don't expect these to make complete sense to anyone; I post them here to give you more of a flavour of what is happening under the hood.

Nasa to send our human genome discs to the Moon

We'd like to say a ‘cosmic hello’: mathematics, culture, palaeontology, art and science, and ... human genomes.

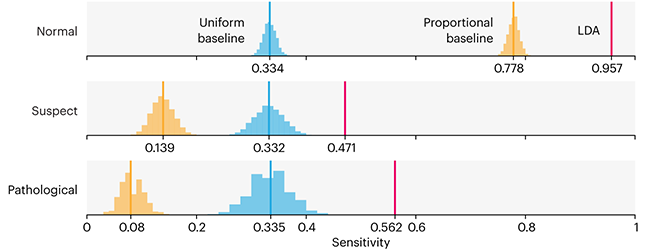

Comparing classifier performance with baselines

All animals are equal, but some animals are more equal than others. —George Orwell

This month, we will illustrate the importance of establishing a baseline performance level.

Baselines are typically generated independently for each dataset using very simple models. Their role is to set the minimum level of acceptable performance and help with comparing relative improvements in performance of other models.

Unfortunately, baselines are often overlooked and, in the presence of class imbalance, must be established with care.

Megahed, F.M., Chen, Y-J., Jones-Farmer, A., Rigdon, S.E., Krzywinski, M. & Altman, N. (2024) Points of significance: Comparing classifier performance with baselines. Nat. Methods 20.

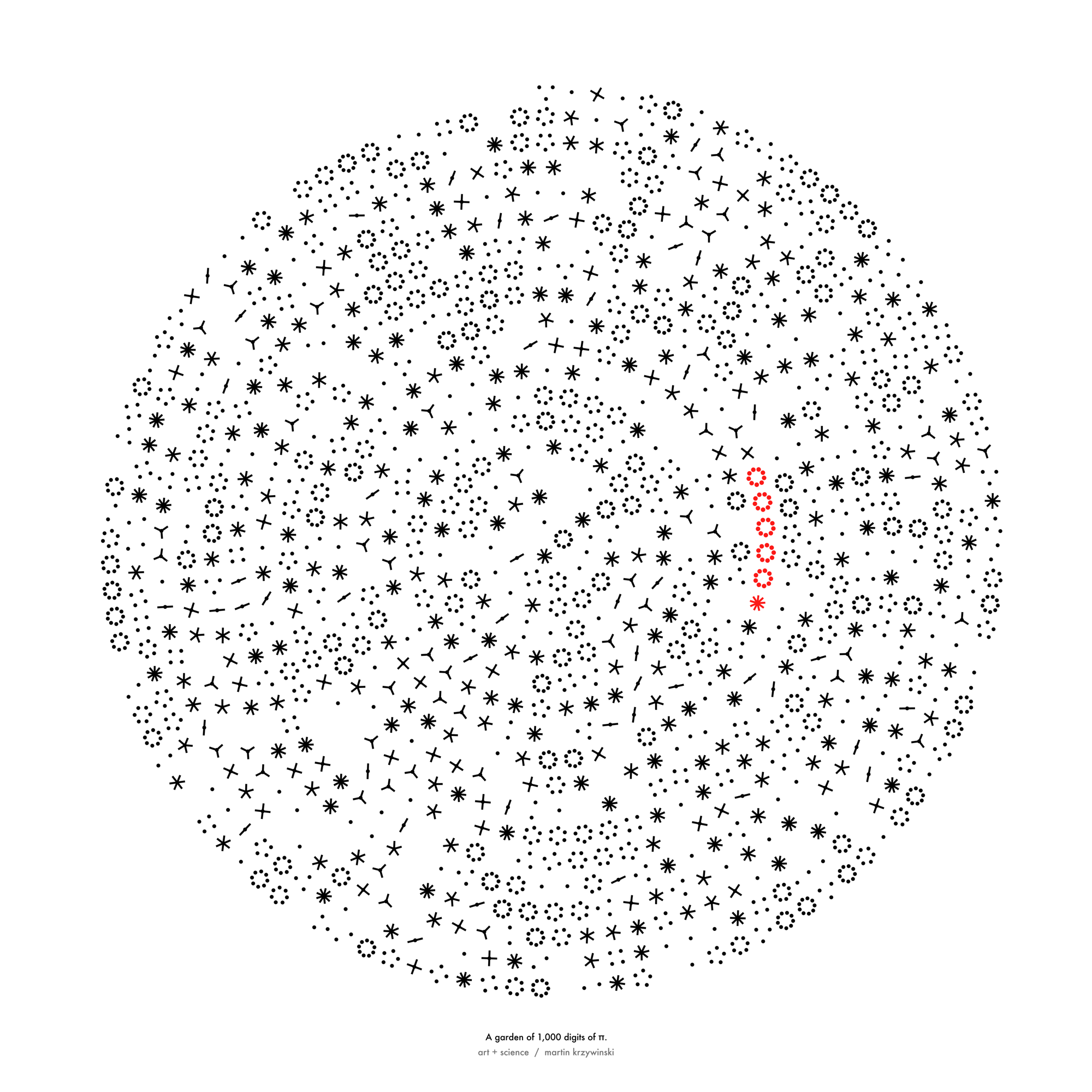

Happy 2024 π Day—

sunflowers ho!

Celebrate π Day (March 14th) and dig into the digit garden. Let's grow something.

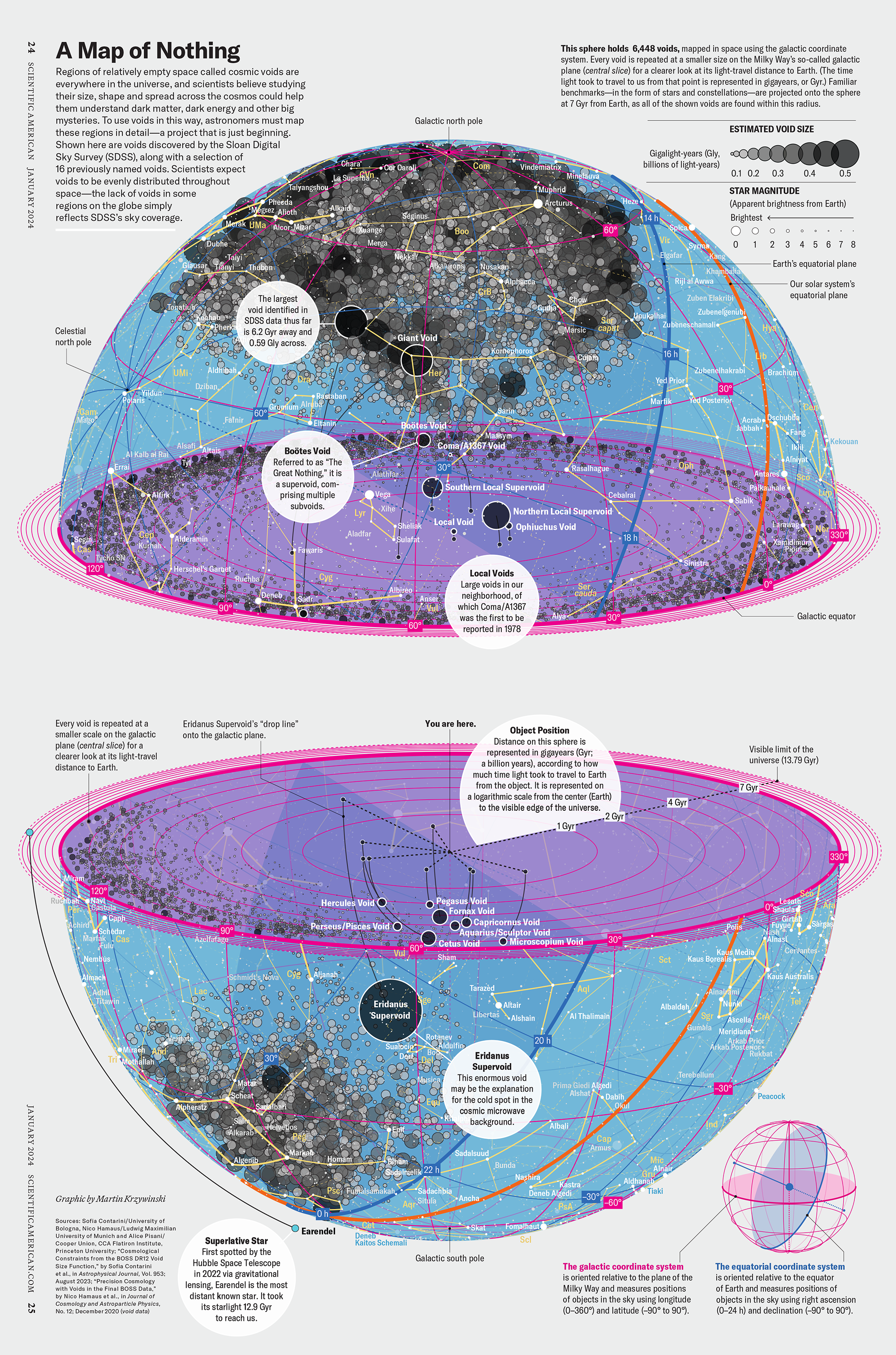

How Analyzing Cosmic Nothing Might Explain Everything

Huge empty areas of the universe called voids could help solve the greatest mysteries in the cosmos.

My graphic accompanying How Analyzing Cosmic Nothing Might Explain Everything in the January 2024 issue of Scientific American depicts the entire Universe in a two-page spread — full of nothing.

The graphic uses the latest data from SDSS Data Release 12 and is an update to my Superclusters and Voids poster.

Michael Lemonick (editor) explains on the graphic:

“Regions of relatively empty space called cosmic voids are everywhere in the universe, and scientists believe studying their size, shape and spread across the cosmos could help them understand dark matter, dark energy and other big mysteries.

To use voids in this way, astronomers must map these regions in detail—a project that is just beginning.

Shown here are voids discovered by the Sloan Digital Sky Survey (SDSS), along with a selection of 16 previously named voids. Scientists expect voids to be evenly distributed throughout space—the lack of voids in some regions on the globe simply reflects SDSS’s sky coverage.”

| Data | Source |
|---|---|
| voids | Sofia Contarini, Alice Pisani, Nico Hamaus, Federico Marulli, Lauro Moscardini & Marco Baldi (2023) Cosmological Constraints from the BOSS DR12 Void Size Function. Astrophysical Journal 953:46. |
| | Nico Hamaus, Alice Pisani, Jin-Ah Choi, Guilhem Lavaux, Benjamin D. Wandelt & Jochen Weller (2020) Journal of Cosmology and Astroparticle Physics 2020:023. |
| | Sloan Digital Sky Survey Data Release 12 |
| constellation figures | Alan MacRobert (Sky & Telescope), Paulina Rowicka/Martin Krzywinski (revisions & Microscopium) |
| stars | Hoffleit & Warren Jr. (1991) The Bright Star Catalog, 5th Revised Edition (Preliminary Version). |
| cosmology | H0 = 67.4 km/(Mpc·s), Ωm = 0.315, Ωv = 0.685. Planck Collaboration, Planck 2018 results. VI. Cosmological parameters (2018). |

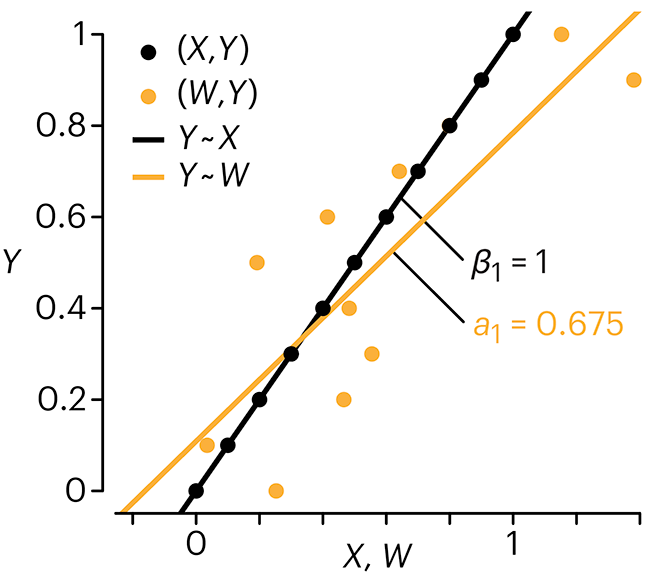

Error in predictor variables

It is the mark of an educated mind to rest satisfied with the degree of precision that the nature of the subject admits and not to seek exactness where only an approximation is possible. —Aristotle

In regression, the predictors are (typically) assumed to have known values that are measured without error.

Practically, however, predictors are often measured with error. This has a profound (but predictable) effect on the estimates of relationships among variables – the so-called “error in variables” problem.

Error in measuring the predictors is often ignored. In this column, we discuss when ignoring this error is harmless and when it can lead to large bias that can cause us to miss important effects.

Altman, N. & Krzywinski, M. (2024) Points of significance: Error in predictor variables. Nat. Methods 20.

Background reading

Altman, N. & Krzywinski, M. (2015) Points of significance: Simple linear regression. Nat. Methods 12:999–1000.

Lever, J., Krzywinski, M. & Altman, N. (2016) Points of significance: Logistic regression. Nat. Methods 13:541–542.

Das, K., Krzywinski, M. & Altman, N. (2019) Points of significance: Quantile regression. Nat. Methods 16:451–452.

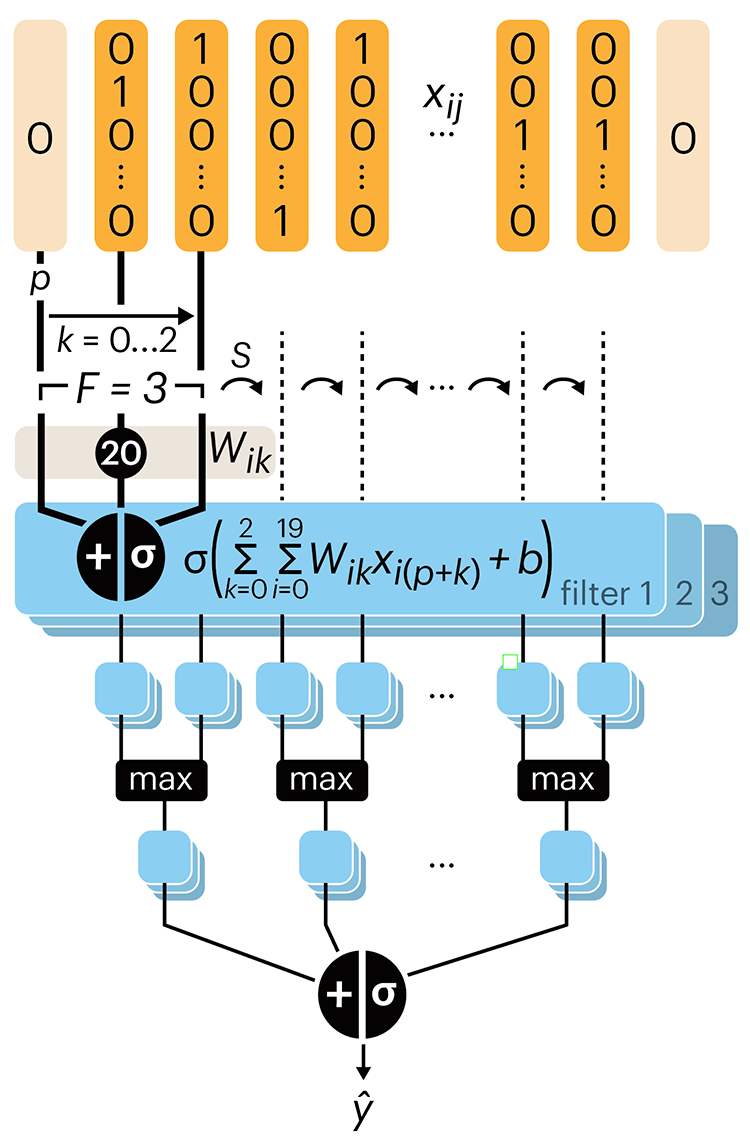

Convolutional neural networks

Nature uses only the longest threads to weave her patterns, so that each small piece of her fabric reveals the organization of the entire tapestry. – Richard Feynman

Following up on our Neural network primer column, this month we explore a different kind of network architecture: a convolutional network.

The convolutional network replaces the hidden layer of a fully connected network (FCN) with one or more filters (a kind of neuron that looks at the input within a narrow window).

Even though convolutional networks have far fewer neurons than an FCN, they can perform substantially better for certain kinds of problems, such as sequence motif detection.

Derry, A., Krzywinski, M. & Altman, N. (2023) Points of significance: Convolutional neural networks. Nature Methods 20:1269–1270.

Background reading

Derry, A., Krzywinski, M. & Altman, N. (2023) Points of significance: Neural network primer. Nature Methods 20:165–167.

Lever, J., Krzywinski, M. & Altman, N. (2016) Points of significance: Logistic regression. Nature Methods 13:541–542.