`\pi` Approximation Day Art Posters

The never-repeating digits of `\pi` can be approximated by 22/7 = 3.142857 to within 0.04%. These pages artistically and mathematically explore rational approximations to `\pi`. This 22/7 ratio is celebrated each year on July 22nd. If you like hand waving or back-of-envelope mathematics, this day is for you: `\pi` approximation day!
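The 0.04% figure is easy to check. The short Python sketch below is an illustration only (the variable names are my own, not from the posters): it computes the relative error of 22/7 and uses the standard library's `Fraction.limit_denominator` to confirm that 22/7 is the closest fraction to `\pi` among those with a denominator of 7 or less.

```python
import math
from fractions import Fraction

# The approximation celebrated on July 22nd
approx = Fraction(22, 7)

# Relative error of 22/7 with respect to pi (positive, since 22/7 > pi)
rel_error = (float(approx) - math.pi) / math.pi
print(f"22/7 = {float(approx):.6f}")
print(f"relative error = {rel_error:.4%}")

# Closest fraction to pi (as a float) with denominator no larger than 7
best_small = Fraction(math.pi).limit_denominator(7)
print(f"best with denominator <= 7: {best_small}")

# Allowing larger denominators gives better approximations
best_larger = Fraction(math.pi).limit_denominator(113)
print(f"best with denominator <= 113: {best_larger}")
```

Raising the denominator limit is a quick way to explore other rational approximations, such as 355/113.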

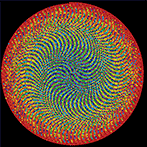

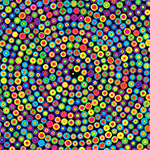

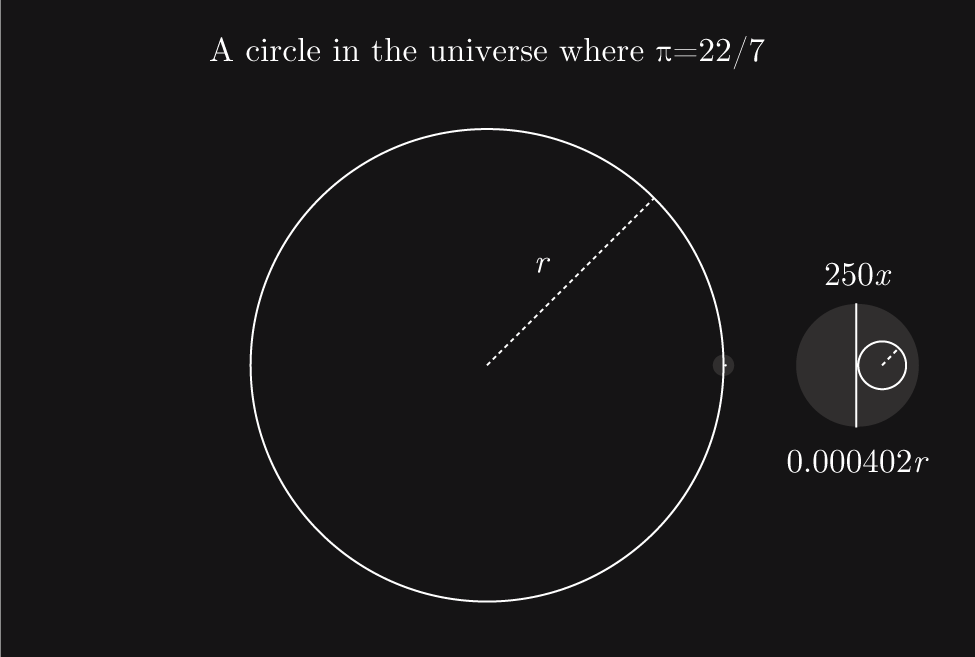

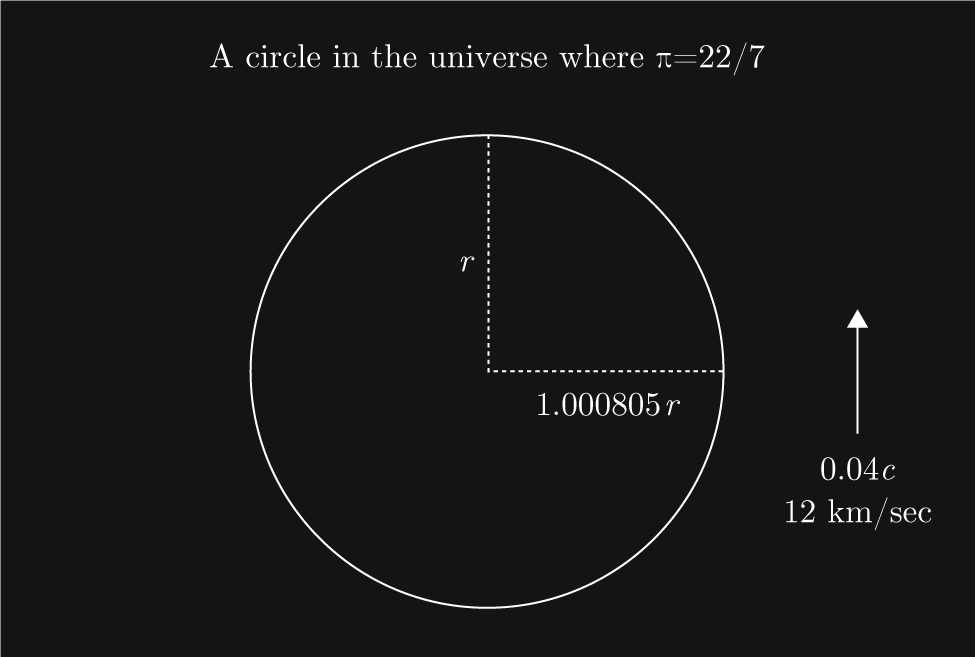

What would circles look like if `\pi`=22/7?

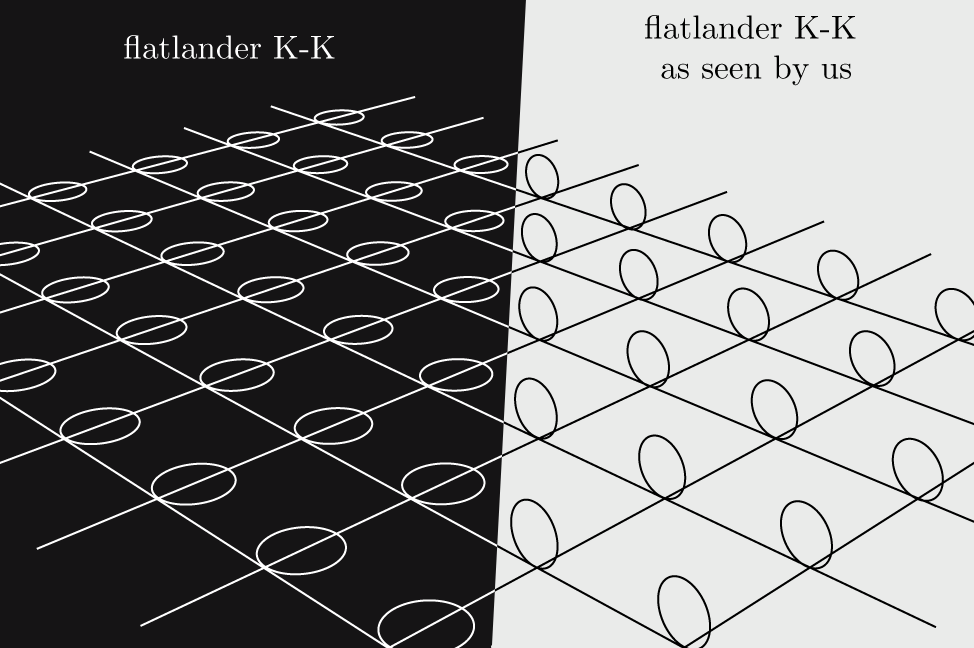

tiny loop, folded dimensions and solidland

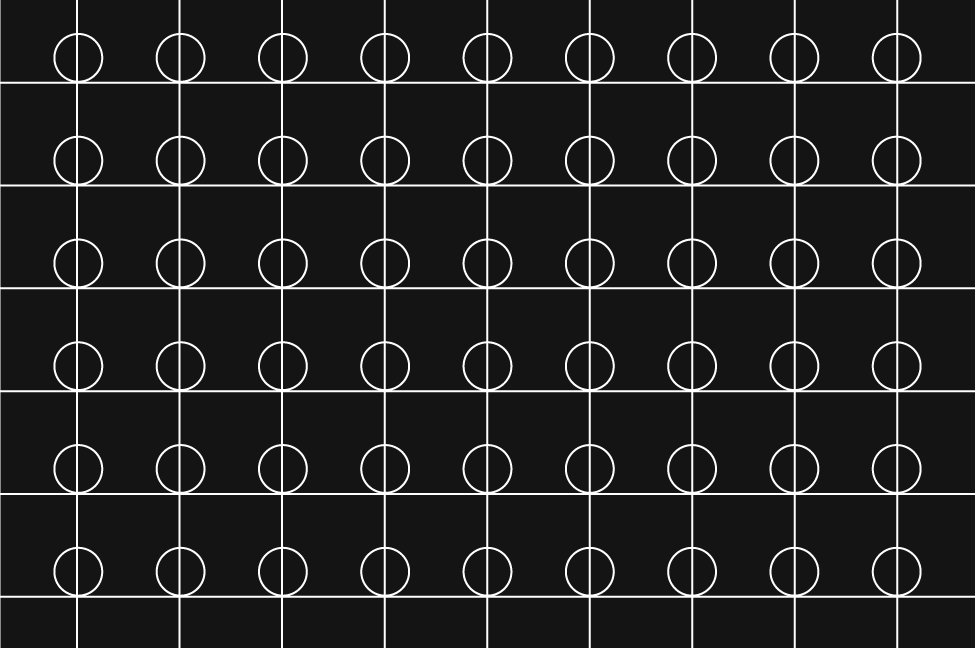

Imagine that the circle had a tiny loop at one of its points. The circumference of this loop would be added to the circumference of the circle, but the loop would be so small that we would never notice it.

This is reminiscent of how string theories describe higher dimensions—as tiny loops at each point in space, except in my example the loop is only at one point.

This idea originated with Klein, who explained the fourth dimension as a curled up circle of a very small radius. Another way in which this curling-up is used is to say that the fifth dimension is a curled up Planck length, as explained in this Imagining 10 Dimensions video.

flatlanders and solidlanders

If this idea is difficult to wrap your head around, you're not alone. We cannot think of additional dimensions in the regular spatial sense since we have no means of experiencing such phenomena. We can, however, imagine how flatlanders might explain the 3rd dimension, since we can perceive it. They would draw the curled up circles in their plane because they would not have the experience of drawing with perspective mimicking our 3rd dimension.

We would draw their explanation as shown on the right in the figure above, borrowing from our concept of the 3rd spatial dimension. Now imagine showing our explanation to a flatlander. They would not see the same thing as you—the circles would not intuitively imply the higher dimension to them.

This is analogous to why we cannot draw folded up dimensions. We are merely solidlanders—flatlanders in 3d space. Creatures that can perceive more spatial dimensions would use us as examples of diminished perceptual ability.

relativistic speeds, frames of reference and length contraction

Another way to imagine how a circle might look is a little more realistic. The theory of special relativity tells us that when we travel at speed relative to another object the dimensions of that object appear contracted to us in the direction of motion.

This contraction is always present, but essentially imperceptible unless we're travelling fast enough. For example, in order for a 1 meter object to appear contracted by the length of a hydrogen molecule (0.3 nm) we would have to be travelling at 7.3 km/s (Wolfram Alpha calculation)!
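As a rough check of this number, here is a minimal Python sketch. It assumes the standard length contraction relation `L = L_0 \sqrt{1 - v^2/c^2}`; the function name `speed_for_contraction` is hypothetical and chosen only for this illustration.

```python
import math

C = 299_792_458.0  # speed of light in m/s

def speed_for_contraction(rest_length_m, shrink_m):
    """Speed (m/s) at which an object of rest length rest_length_m
    appears shorter by shrink_m along the direction of motion."""
    # Contracted-to-rest length ratio: L / L0 = sqrt(1 - v^2/c^2)
    ratio = (rest_length_m - shrink_m) / rest_length_m
    # 1 - ratio^2 written as a product to limit round-off for tiny shrinkage
    beta = math.sqrt((1.0 - ratio) * (1.0 + ratio))
    return beta * C

# A 1 m object contracted by 0.3 nm (about the size of a hydrogen molecule)
v = speed_for_contraction(1.0, 0.3e-9)
print(f"{v / 1000:.1f} km/s")  # about 7.3 km/s
```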

How fast would we have to be going to compress the circle sufficiently so that the ratio of its circumference to its radius embodies the `22/7` approximation of `\pi`? Pretty fast, it turns out. If we travel at just over 12,000 km/s (0.04 times the speed of light, Wolfram Alpha calculation), the circle will compress as shown in the figure above, and the ratio of its circumference to the radius along the direction of motion will make `\pi` appear to be `22/7`.

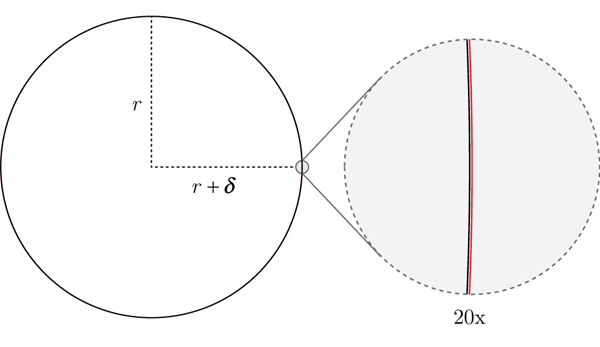

This compression in length would be barely perceptible to us. Below are both circles, shown overlapping, with `\delta` being the extra length in radius required.

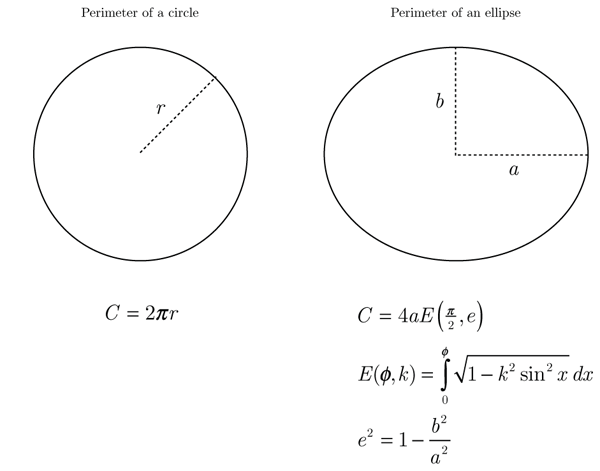

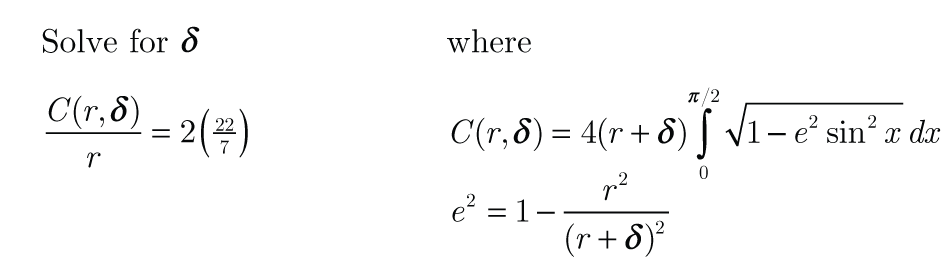

The value of `\delta`, which is 0.0008049179155 (if `r = 1`), can be calculated by considering the perimeter of an ellipse. The fact that `\delta` is small shouldn't be surprising since `22/7` is an excellent approximation of `\pi`, good to 0.04%.

Calculating the perimeter of an ellipse is more complicated than calculating it for a circle because it uses something called an elliptic integral. This integral has no closed-form solution in terms of elementary functions and requires numerical approximation. Luckily, we have computers.

We can use the expression shown above for the perimeter of the ellipse to determine how much the circle needs to be deformed. Let's write `a = r + \delta` (original radius with slight deformation `\delta`) and `b=r`. Since `22/7 > \pi` we know that `\delta > 0`.

It remains to solve the equation below for a value of `\delta` that will yield a ratio of circumference to `r` of `2 \times 22/7`.

To make things simpler, let's set `r=1`. Solving the equation numerically, I find $$\delta = 0.0008049179155$$

You can verify this solution at Wolfram Alpha.
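The value can also be checked locally. The Python sketch below is one possible way to do it, not the calculation used for the figures. It assumes the ellipse perimeter is `4 a E(\pi/2, e)` with eccentricity `e = \sqrt{1 - b^2/a^2}`, evaluates the integral with Simpson's rule and finds `\delta` by bisection. It then converts `\delta` to a speed, assuming the contracted radius `b = r` and the uncontracted radius `a = r + \delta` are related by `b/a = \sqrt{1 - v^2/c^2}`. All function names are hypothetical.

```python
import math

C_KM_S = 299_792.458  # speed of light in km/s

def incomplete_E(phi, e, n=2000):
    """Incomplete elliptic integral of the second kind, E(phi, e),
    approximated with composite Simpson's rule on n (even) intervals."""
    h = phi / n
    def f(t):
        return math.sqrt(1.0 - (e * math.sin(t)) ** 2)
    total = f(0.0) + f(phi)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * f(i * h)
    return total * h / 3.0

def ellipse_perimeter(a, b):
    """Perimeter of an ellipse with semi-axes a >= b."""
    e = math.sqrt(1.0 - (b / a) ** 2)  # eccentricity
    return 4.0 * a * incomplete_E(math.pi / 2, e)

def solve_delta(target=2 * 22 / 7, r=1.0, lo=0.0, hi=0.01, tol=1e-13):
    """Bisection for delta such that perimeter(r + delta, r) / r == target."""
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if ellipse_perimeter(r + mid, r) / r < target:
            lo = mid   # ellipse still too small, stretch more
        else:
            hi = mid   # ellipse too large, stretch less
    return 0.5 * (lo + hi)

delta = solve_delta()
print(f"delta = {delta:.13f}")

# Speed at which a circle of radius 1 + delta contracts to radius 1
beta = math.sqrt(1.0 - 1.0 / (1.0 + delta) ** 2)
v_km_s = beta * C_KM_S
print(f"v = {v_km_s:.0f} km/s ({beta:.4f} c)")
```

Under these assumptions the result agrees with the quoted `\delta` and with the speed of just over 12,000 km/s.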

the meaning of full-circle

After all this, we come full-circle to the meaning of full-circle.

You might ask why I didn't change the definition of `\pi` to `22/7` in the upper limit of the integral. After all, why not make the approximation exercise more faithful to the approximation?

It turns out that if I did that I would get `\delta=0`, which brings us back to the original circle. How is this possible?

Technically, this is because the integral returns the upper limit as its answer if the eccentricity is zero (i.e., `E(x,0)=x`).

Intuitively, this is because changing the upper limit of the integral actually redefines the angle of a full revolution. Now, full-circle isn't `2 \pi` radians, but `2 \times 22/7`. Given that the ratio of the circumference of a circle to its radius is exactly the size, in radians, of a full revolution, we don't need to change the shape of the circle if we're willing to change what a full revolution means.
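This can be illustrated numerically. The sketch below assumes the circumference is computed as `4 r E(x, e)`, where the upper limit `x` is a quarter of a full revolution, and approximates the integral with Simpson's rule. The function name `incomplete_E` is hypothetical. With zero eccentricity the integrand is 1, so the integral returns its upper limit and the circumference-to-radius ratio is whatever we define a full revolution to be.

```python
import math

def incomplete_E(phi, e, n=2000):
    """Incomplete elliptic integral of the second kind, E(phi, e),
    approximated with composite Simpson's rule on n (even) intervals."""
    h = phi / n
    def f(t):
        return math.sqrt(1.0 - (e * math.sin(t)) ** 2)
    total = f(0.0) + f(phi)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * f(i * h)
    return total * h / 3.0

r = 1.0

# Usual definition: a quarter revolution is pi/2 radians
usual = 4 * r * incomplete_E(math.pi / 2, 0.0)

# Redefined: a quarter revolution is (22/7)/2 "radians"
redefined = 4 * r * incomplete_E((22 / 7) / 2, 0.0)

print(usual, 2 * math.pi)      # undeformed circle, full revolution of 2*pi
print(redefined, 2 * 22 / 7)   # same circle, full revolution of 2*22/7
```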

Nasa to send our human genome discs to the Moon

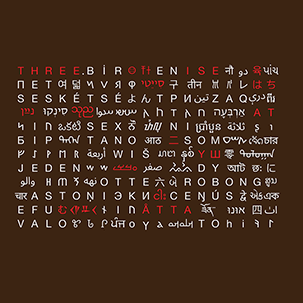

We'd like to say a ‘cosmic hello’: mathematics, culture, palaeontology, art and science, and ... human genomes.

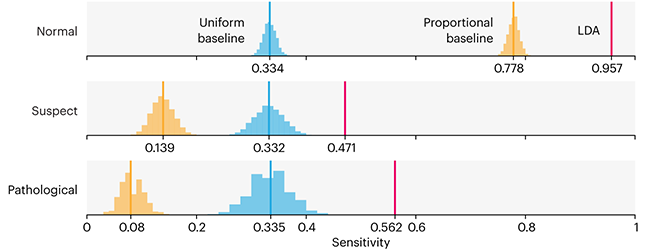

Comparing classifier performance with baselines

All animals are equal, but some animals are more equal than others. —George Orwell

This month, we will illustrate the importance of establishing a baseline performance level.

Baselines are typically generated independently for each dataset using very simple models. Their role is to set the minimum level of acceptable performance and help with comparing relative improvements in performance of other models.

Unfortunately, baselines are often overlooked and, in the presence of a class imbalance, must be established with care.

Megahed, F.M., Chen, Y-J., Jones-Farmer, A., Rigdon, S.E., Krzywinski, M. & Altman, N. (2024) Points of significance: Comparing classifier performance with baselines. Nat. Methods 20.

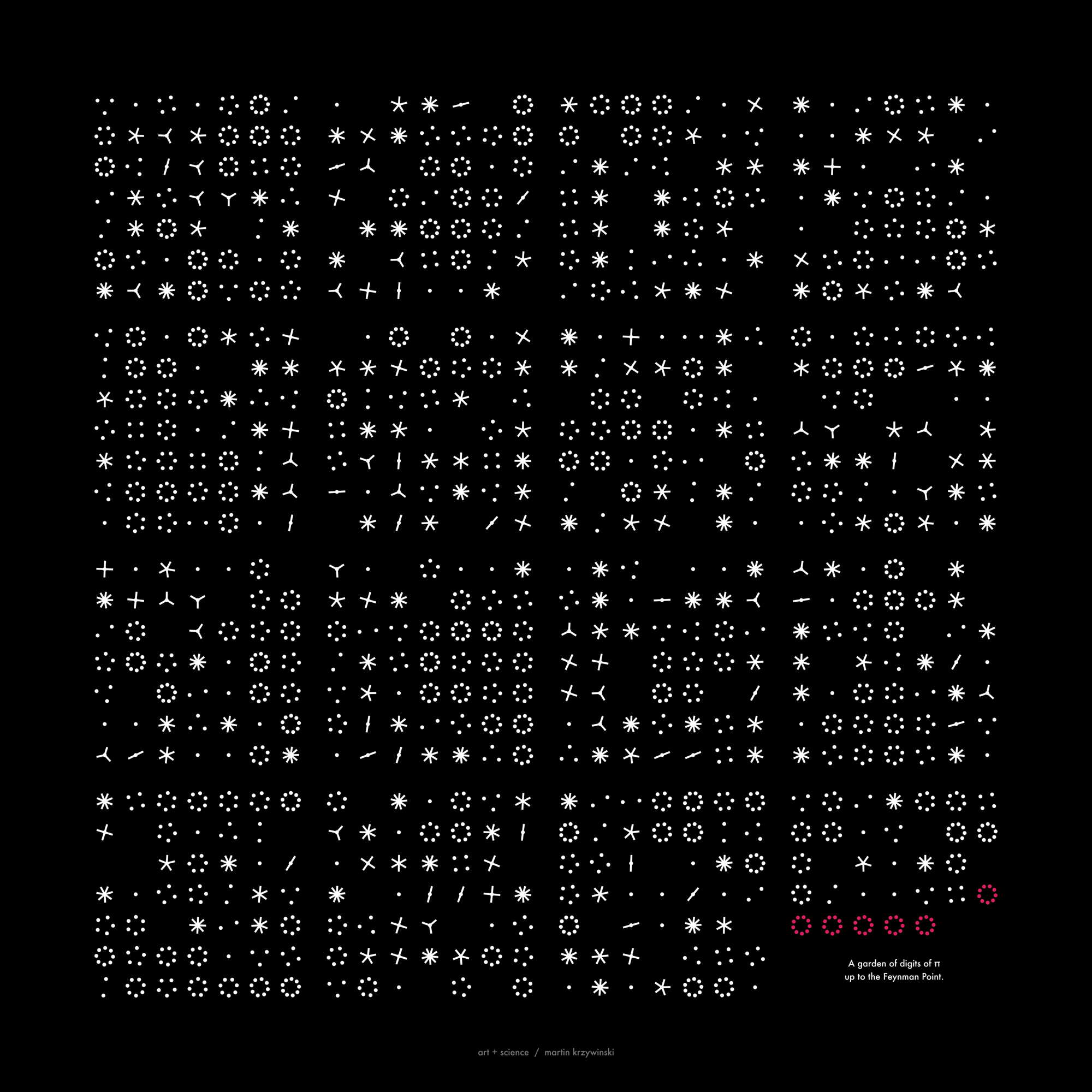

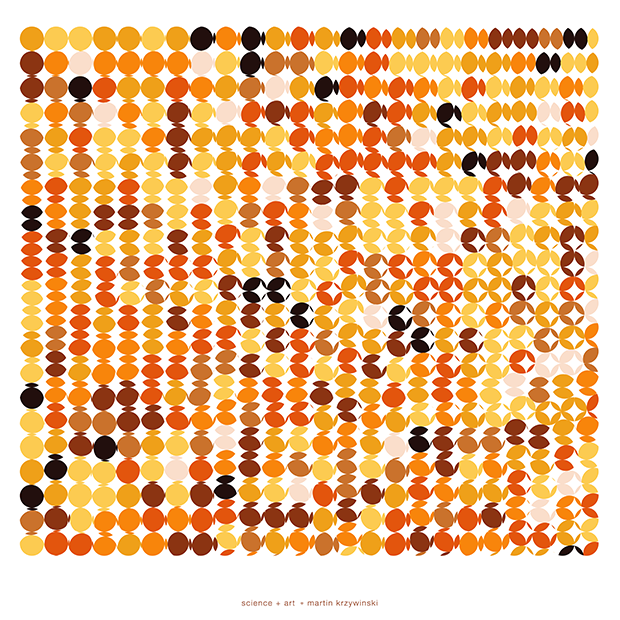

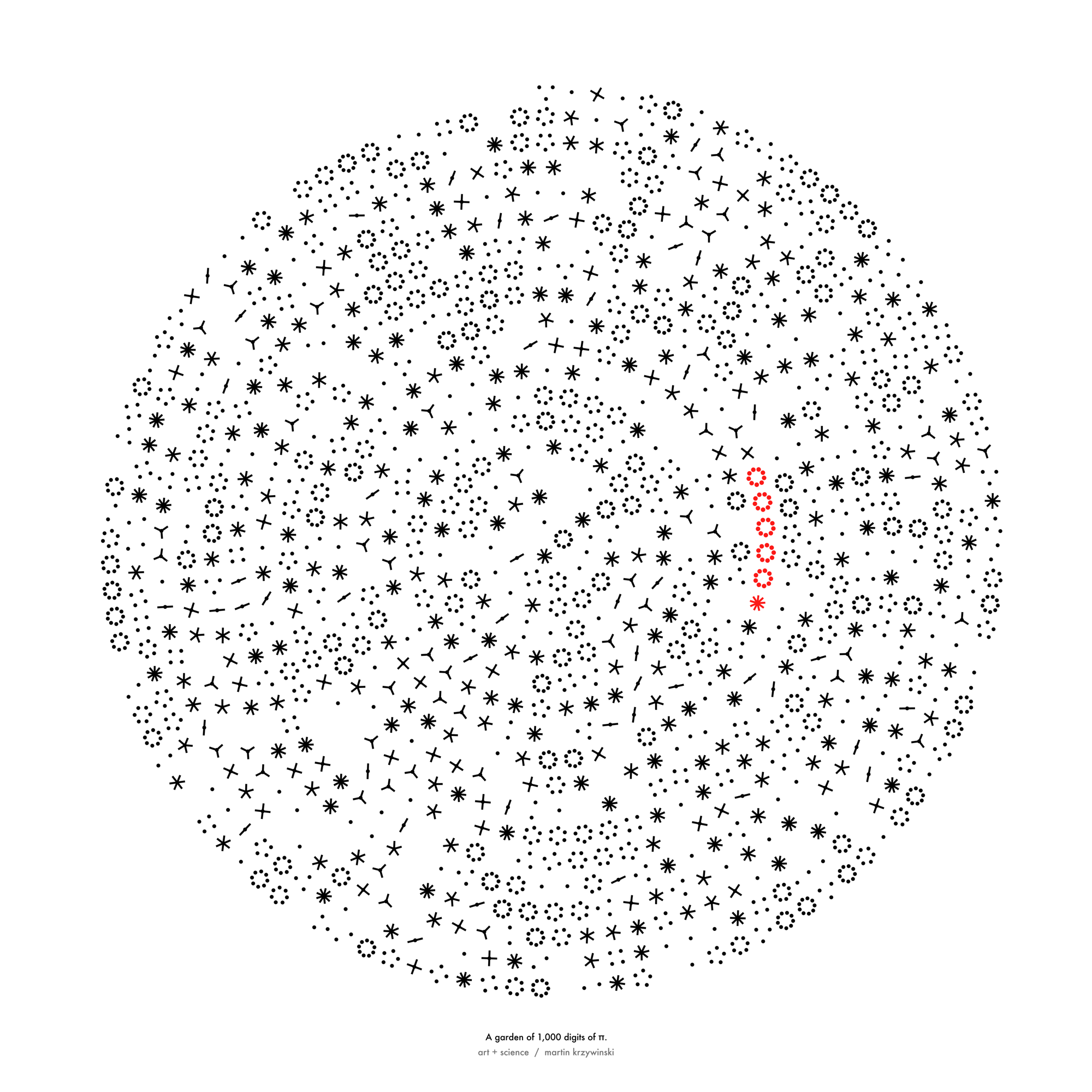

Happy 2024 π Day—

sunflowers ho!

Celebrate π Day (March 14th) and dig into the digit garden. Let's grow something.

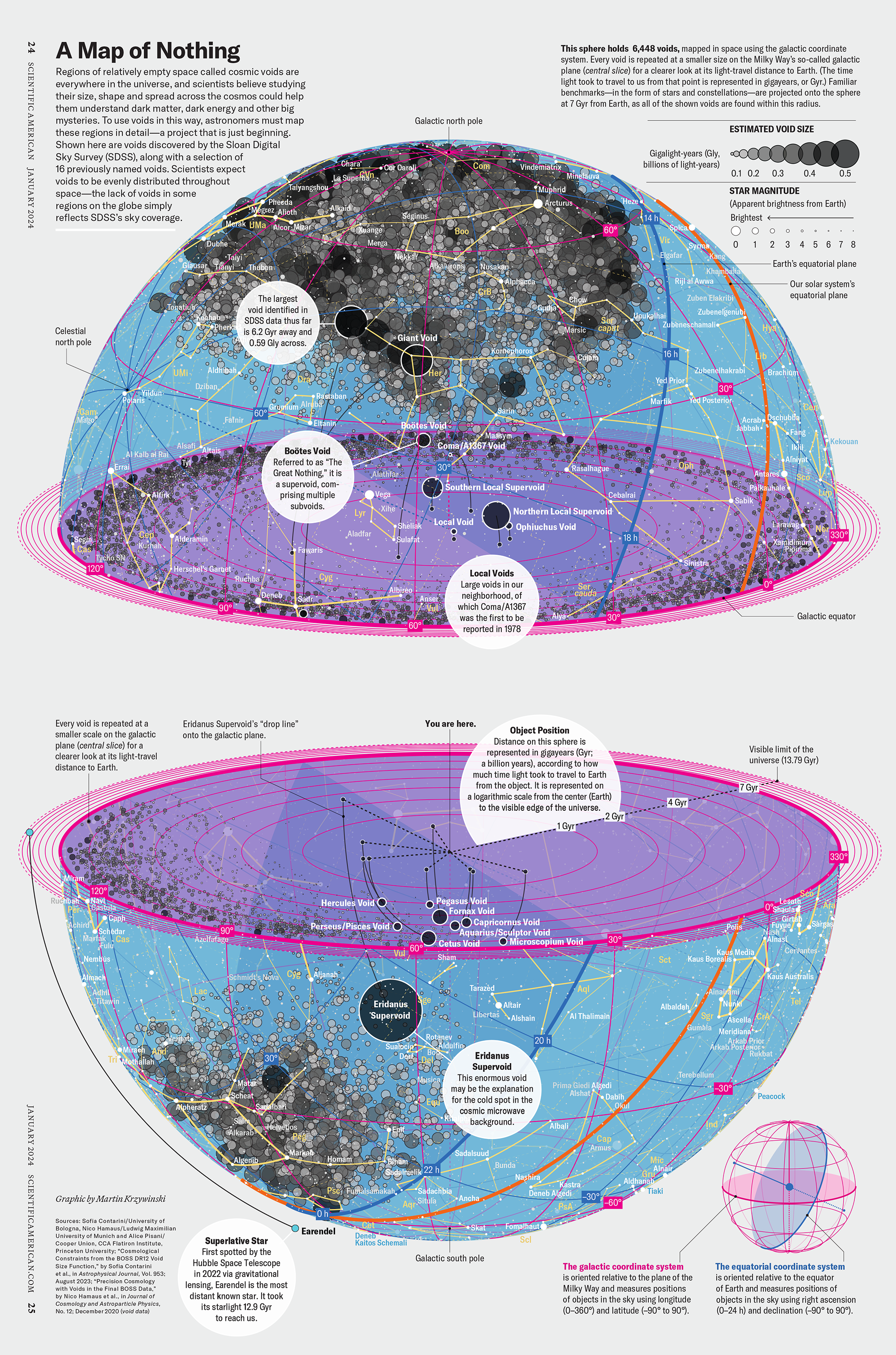

How Analyzing Cosmic Nothing Might Explain Everything

Huge empty areas of the universe called voids could help solve the greatest mysteries in the cosmos.

My graphic accompanying How Analyzing Cosmic Nothing Might Explain Everything in the January 2024 issue of Scientific American depicts the entire Universe in a two-page spread — full of nothing.

The graphic uses the latest data from SDSS 12 and is an update to my Superclusters and Voids poster.

Michael Lemonick (editor) explains on the graphic:

“Regions of relatively empty space called cosmic voids are everywhere in the universe, and scientists believe studying their size, shape and spread across the cosmos could help them understand dark matter, dark energy and other big mysteries.

To use voids in this way, astronomers must map these regions in detail—a project that is just beginning.

Shown here are voids discovered by the Sloan Digital Sky Survey (SDSS), along with a selection of 16 previously named voids. Scientists expect voids to be evenly distributed throughout space—the lack of voids in some regions on the globe simply reflects SDSS’s sky coverage.”

voids

Sofia Contarini, Alice Pisani, Nico Hamaus, Federico Marulli, Lauro Moscardini & Marco Baldi (2023) Cosmological Constraints from the BOSS DR12 Void Size Function. Astrophysical Journal 953:46.

Nico Hamaus, Alice Pisani, Jin-Ah Choi, Guilhem Lavaux, Benjamin D. Wandelt & Jochen Weller (2020) Journal of Cosmology and Astroparticle Physics 2020:023.

Sloan Digital Sky Survey Data Release 12

constellation figures

Alan MacRobert (Sky & Telescope), Paulina Rowicka/Martin Krzywinski (revisions & Microscopium)

stars

Hoffleit & Warren Jr. (1991) The Bright Star Catalog, 5th Revised Edition (Preliminary Version).

cosmology

H0 = 67.4 km/(Mpc·s), Ωm = 0.315, Ωv = 0.685. Planck collaboration (2018) Planck 2018 results. VI. Cosmological parameters.

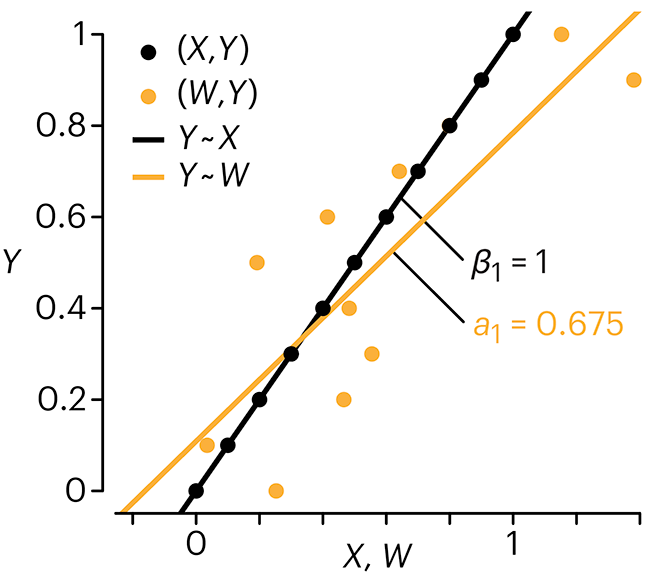

Error in predictor variables

It is the mark of an educated mind to rest satisfied with the degree of precision that the nature of the subject admits and not to seek exactness where only an approximation is possible. —Aristotle

In regression, the predictors are (typically) assumed to have known values that are measured without error.

Practically, however, predictors are often measured with error. This has a profound (but predictable) effect on the estimates of relationships among variables – the so-called “error in variables” problem.

Error in measuring the predictors is often ignored. In this column, we discuss when ignoring this error is harmless and when it can lead to large bias that can lead us to miss important effects.

Altman, N. & Krzywinski, M. (2024) Points of significance: Error in predictor variables. Nat. Methods 20.

Background reading

Altman, N. & Krzywinski, M. (2015) Points of significance: Simple linear regression. Nat. Methods 12:999–1000.

Lever, J., Krzywinski, M. & Altman, N. (2016) Points of significance: Logistic regression. Nat. Methods 13:541–542.

Das, K., Krzywinski, M. & Altman, N. (2019) Points of significance: Quantile regression. Nat. Methods 16:451–452.

Convolutional neural networks

Nature uses only the longest threads to weave her patterns, so that each small piece of her fabric reveals the organization of the entire tapestry. – Richard Feynman

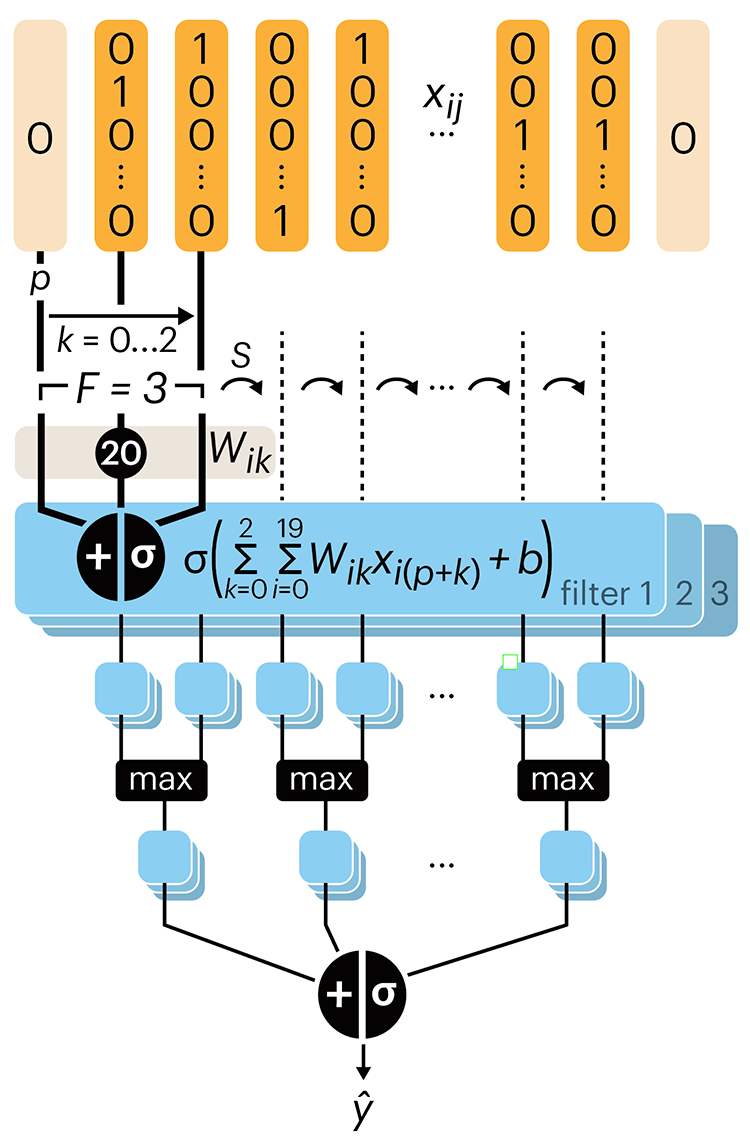

Following up on our Neural network primer column, this month we explore a different kind of network architecture: a convolutional network.

The convolutional network replaces the hidden layer of a fully connected network (FCN) with one or more filters (a kind of neuron that looks at the input within a narrow window).

Even though convolutional networks have far fewer neurons than an FCN, they can perform substantially better for certain kinds of problems, such as sequence motif detection.

Derry, A., Krzywinski, M. & Altman, N. (2023) Points of significance: Convolutional neural networks. Nature Methods 20:1269–1270.

Background reading

Derry, A., Krzywinski, M. & Altman, N. (2023) Points of significance: Neural network primer. Nature Methods 20:165–167.

Lever, J., Krzywinski, M. & Altman, N. (2016) Points of significance: Logistic regression. Nature Methods 13:541–542.